Which of the following virus types changes some of its characteristics as it spreads?

Boot Sector

Parasitic

Stealth

Polymorphic

A Polymorphic virus produces varied but operational copies of itself in hopes of evading anti-virus software.

The following answers are incorrect:

Boot sector: Incorrect because it is not the best answer. A boot sector virus attacks the boot sector of a drive. The term describes what the virus attacks, not how its composition changes.

Parasitic: Incorrect because it is not the best answer. A parasitic virus attaches itself to other files but does not change its characteristics.

Stealth: Incorrect because it is not the best answer. A stealth virus attempts to hide the changes it makes to affected files, but it does not alter itself.

Which of the following technologies is a target of XSS or CSS (Cross-Site Scripting) attacks?

Web Applications

Intrusion Detection Systems

Firewalls

DNS Servers

XSS or Cross-Site Scripting is a threat to web applications where malicious code is placed on a website and attacks the user by abusing their existing authenticated session.

Cross-Site Scripting attacks are a type of injection problem, in which malicious scripts are injected into the otherwise benign and trusted web sites. Cross-site scripting (XSS) attacks occur when an attacker uses a web application to send malicious code, generally in the form of a browser side script, to a different end user. Flaws that allow these attacks to succeed are quite widespread and occur anywhere a web application uses input from a user in the output it generates without validating or encoding it.

An attacker can use XSS to send a malicious script to an unsuspecting user. The end user’s browser has no way to know that the script should not be trusted, and will execute the script. Because it thinks the script came from a trusted source, the malicious script can access any cookies, session tokens, or other sensitive information retained by your browser and used with that site. These scripts can even rewrite the content of the HTML page.
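The encoding step mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical `render_comment` helper that places user input into an HTML page; only the standard library `html.escape` call is real, the rest is made up for illustration.

```python
import html


def render_comment(user_input: str) -> str:
    """Return an HTML fragment with the user's text encoded for output."""
    # html.escape turns &, <, > and quote characters into HTML entities,
    # so the browser displays the text instead of executing it as script.
    return "<p>" + html.escape(user_input) + "</p>"


payload = "<script>alert(1)</script>"
print(render_comment(payload))
```

Output encoding of this kind addresses only one class of XSS; the cheat sheets referenced below cover the other contexts (attributes, JavaScript, URLs).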

Mitigation:

Configure your IPS - Intrusion Prevention System to detect and suppress this traffic.

Input Validation on the web application to normalize inputted data.

Set web apps to bind session cookies to the IP Address of the legitimate user and only permit that IP Address to use that cookie.

See the XSS (Cross Site Scripting) Prevention Cheat Sheet

See the Abridged XSS Prevention Cheat Sheet

See the DOM based XSS Prevention Cheat Sheet

See the OWASP Development Guide article on Phishing.

See the OWASP Development Guide article on Data Validation.

The following answers are incorrect:

Intrusion Detection Systems: Sorry. IDSs aren't usually the target of XSS attacks, but a properly configured IDS/IPS can detect and report on malicious strings and suppress the TCP connection in an attempt to mitigate the threat.

Firewalls: Sorry. Firewalls aren't usually the target of XSS attacks.

DNS Servers: Same as above, DNS Servers aren't usually targeted in XSS attacks but they play a key role in the domain name resolution in the XSS attack process.

The following reference(s) was used to create this question:

CCCure Holistic Security+ CBT and Curriculum

and

https://www.owasp.org/index.php/Cross-site_Scripting_%28XSS%29

What best describes a scenario when an employee has been shaving off pennies from multiple accounts and depositing the funds into his own bank account?

Data fiddling

Data diddling

Salami techniques

Trojan horses

Source: HARRIS, Shon, All-In-One CISSP Certification Exam Guide, McGraw-Hill/Osborne, 2001, Page 644.

Virus scanning and content inspection of SMIME encrypted e-mail without doing any further processing is:

Not possible

Only possible with key recovery scheme of all user keys

It is possible only if X509 Version 3 certificates are used

It is possible only by "brute force" decryption

Content security measures presume that the content is available in cleartext on the central mail server.

Encrypted emails have to be decrypted before they can be filtered (e.g. to detect viruses), so you need the decryption key on the central "crypto mail server".

There are several ways for such key management, e.g. by message or key recovery methods. However, that would certainly require further processing in order to achieve such goal.

Which of the following is defined as an Internet, IPsec, key-establishment protocol, partly based on OAKLEY, that is intended for putting in place authenticated keying material for use with ISAKMP and for other security associations?

Internet Key exchange (IKE)

Security Association Authentication Protocol (SAAP)

Simple Key-management for Internet Protocols (SKIP)

Key Exchange Algorithm (KEA)

RFC 2828 (Internet Security Glossary) defines IKE as an Internet, IPsec, key-establishment protocol (partly based on OAKLEY) that is intended for putting in place authenticated keying material for use with ISAKMP and for other security associations, such as in AH and ESP.

The following are incorrect answers:

SKIP is a key distribution protocol that uses hybrid encryption to convey session keys that are used to encrypt data in IP packets.

The Key Exchange Algorithm (KEA) is defined as a key agreement algorithm that is similar to the Diffie-Hellman algorithm, uses 1024-bit asymmetric keys, and was developed and formerly classified at the secret level by the NSA.

Security Association Authentication Protocol (SAAP) is a distracter.

Reference(s) used for this question:

SHIREY, Robert W., RFC2828: Internet Security Glossary, May 2000.

A one-way hash provides which of the following?

Confidentiality

Availability

Integrity

Authentication

A one-way hash is a function that takes a variable-length string, a message, and compresses and transforms it into a fixed-length value referred to as a hash value. It provides integrity, but no confidentiality, availability or authentication.
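As a small illustration of the fixed-length property and of how a hash supports integrity checking, the sketch below uses SHA-256 from Python's standard library. The choice of SHA-256 and the sample messages are assumptions made for the example; the text does not name a specific hash function here.

```python
import hashlib


def digest_hex(message: bytes) -> str:
    """Return the SHA-256 digest of a message as a hex string."""
    return hashlib.sha256(message).hexdigest()


# Inputs of very different lengths produce digests of the same length.
short = digest_hex(b"a")
long_ = digest_hex(b"a" * 10_000)
print(len(short), len(long_))

# A small change to the message produces a different digest,
# which is how a receiver can detect that the message was altered.
original = digest_hex(b"Pay Bob 100 dollars")
tampered = digest_hex(b"Pay Bob 900 dollars")
print(original == tampered)
```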

Source: WALLHOFF, John, CBK#5 Cryptography (CISSP Study Guide), April 2002 (page 5).

The Clipper Chip utilizes which concept in public key cryptography?

Substitution

Key Escrow

An undefined algorithm

Super strong encryption

The Clipper chip is a chipset that was developed and promoted by the U.S. Government as an encryption device to be adopted by telecommunications companies for voice transmission. It was announced in 1993 and by 1996 was entirely defunct.

The heart of the concept was key escrow. In the factory, any new telephone or other device with a Clipper chip would be given a "cryptographic key" that would then be provided to the government in "escrow". If government agencies "established their authority" to listen to a communication, then the key would be given to those government agencies, who could then decrypt all data transmitted by that particular telephone.

The CISSP Prep Guide states, "The idea is to divide the key into two parts, and to escrow two portions of the key with two separate 'trusted' organizations. Then, law enforcement officials, after obtaining a court order, can retrieve the two pieces of the key from the organizations and decrypt the message."
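The idea of dividing a key into two escrowed parts can be sketched as follows. This is a generic XOR split shown for illustration under stated assumptions; it is not presented as the exact procedure used for the Clipper chip, and the function names are hypothetical.

```python
import secrets


def split_key(key: bytes) -> tuple[bytes, bytes]:
    """Split a key into two shares; neither share alone reveals the key."""
    share_a = secrets.token_bytes(len(key))                # random share
    share_b = bytes(k ^ a for k, a in zip(key, share_a))   # key XOR share_a
    return share_a, share_b


def recombine(share_a: bytes, share_b: bytes) -> bytes:
    """Recover the key once both escrow agents release their shares."""
    return bytes(a ^ b for a, b in zip(share_a, share_b))


device_key = secrets.token_bytes(10)   # 80-bit key, chosen for the example
agent_1, agent_2 = split_key(device_key)
print(recombine(agent_1, agent_2) == device_key)
```

Each escrow organization would hold one share, so both must cooperate before the key can be rebuilt.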

References:

http://en.wikipedia.org/wiki/Clipper_Chip

and

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, page 166.

What attribute is included in a X.509-certificate?

Distinguished name of the subject

Telephone number of the department

secret key of the issuing CA

the key pair of the certificate holder

RFC 2459 : Internet X.509 Public Key Infrastructure Certificate and CRL Profile; GUTMANN, P., X.509 style guide; SMITH, Richard E., Internet Cryptography, 1997, Addison-Wesley Pub Co.

Which of the following answers is described as a random value used in cryptographic algorithms to ensure that patterns are not created during the encryption process?

IV - Initialization Vector

Stream Cipher

OTP - One Time Pad

Ciphertext

The basic power in cryptography is randomness. This uncertainty is why encrypted data is unusable to someone without the key to decrypt.

Initialization Vectors are used with encryption keys to add an extra layer of randomness to encrypted data. If no IV is used, an attacker may be able to exploit patterns that appear in the ciphertext. An implementation such as DES in Electronic Code Book (ECB) mode, which uses no IV, would allow a frequency analysis attack to take place.

In cryptography, an initialization vector (IV) or starting variable (SV) is a fixed-size input to a cryptographic primitive that is typically required to be random or pseudorandom. Randomization is crucial for encryption schemes to achieve semantic security, a property whereby repeated usage of the scheme under the same key does not allow an attacker to infer relationships between segments of the encrypted message. For block ciphers, the use of an IV is described by so-called modes of operation. Randomization is also required for other primitives, such as universal hash functions and message authentication codes based thereon.

It is defined by TechTarget as:

An initialization vector (IV) is an arbitrary number that can be used along with a secret key for data encryption. This number, also called a nonce, is employed only one time in any session.

The use of an IV prevents repetition in data encryption, making it more difficult for a hacker using a dictionary attack to find patterns and break a cipher. For example, a sequence might appear twice or more within the body of a message. If there are repeated sequences in encrypted data, an attacker could assume that the corresponding sequences in the message were also identical. The IV prevents the appearance of corresponding duplicate character sequences in the ciphertext.
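The effect of an IV can be demonstrated with a toy cipher. The sketch below is an assumption made purely for illustration: it derives a keystream from SHA-256 over the key, the IV and a counter, and XORs it with the plaintext. It is not a real or vetted cipher; it only shows that the same message under the same key repeats when the IV is fixed and differs when the IV is fresh.

```python
import hashlib
import os


def keystream(key: bytes, iv: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || iv || counter), illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + iv + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def toy_encrypt(key: bytes, iv: bytes, data: bytes) -> bytes:
    """XOR the data with the keystream; the same call also decrypts."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, iv, len(data))))


key = os.urandom(16)
message = b"ATTACK AT DAWN"

# Fixed IV: identical messages give identical ciphertexts (a visible pattern).
fixed_iv = bytes(16)
c1 = toy_encrypt(key, fixed_iv, message)
c2 = toy_encrypt(key, fixed_iv, message)

# Fresh random IV per message: the ciphertexts no longer match.
iv3, iv4 = os.urandom(16), os.urandom(16)
c3 = toy_encrypt(key, iv3, message)
c4 = toy_encrypt(key, iv4, message)

print(c1 == c2, c3 == c4)
```

The IV is not secret and is normally sent along with the ciphertext so the receiver can decrypt.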

The following answers are incorrect:

- Stream Cipher: This isn't correct. A stream cipher is a symmetric key cipher where plaintext digits are combined with a pseudorandom keystream to produce ciphertext.

- OTP - One Time Pad: This isn't correct, but an OTP is made up of random values used as key material (the encryption key). It is considered by most to be unbreakable, but the key must be replaced with a new one after each use, which makes it impractical for common use.

- Ciphertext: Sorry, incorrect answer. Ciphertext is basically text that has been encrypted with key material (the encryption key).

The following reference(s) was used to create this question:

For more details on this topic and other questions of the Security+ CBK, subscribe to our Holistic Computer Based Tutorial (CBT) at http://www.cccure.tv

and

whatis.techtarget.com/definition/initialization-vector-IV

and

en.wikipedia.org/wiki/Initialization_vector

Which of the following is NOT a property of the Rijndael block cipher algorithm?

The key sizes must be a multiple of 32 bits

Maximum block size is 256 bits

Maximum key size is 512 bits

The key size does not have to match the block size

The statement "Maximum key size is 512 bits" is NOT true and is thus the correct answer. The maximum key size for Rijndael is 256 bits.

There are some differences between Rijndael and the official FIPS-197 specification for AES.

Rijndael specification per se is specified with block and key sizes that must be a multiple of 32 bits, both with a minimum of 128 and a maximum of 256 bits. Namely, Rijndael allows for both key and block sizes to be chosen independently from the set of { 128, 160, 192, 224, 256 } bits. (And the key size does not in fact have to match the block size).

However, FIPS-197 specifies that the block size must always be 128 bits in AES, and that the key size may be either 128, 192, or 256 bits. Therefore AES-128, AES-192, and AES-256 are actually:

          Key Size (bits)   Block Size (bits)
AES-128   128               128
AES-192   192               128
AES-256   256               128

So in short:

Rijndael and AES differ only in the range of supported values for the block length and cipher key length.

For Rijndael, the block length and the key length can be independently specified to any multiple of 32 bits, with a minimum of 128 bits, and a maximum of 256 bits.

AES fixes the block length to 128 bits, and supports key lengths of 128, 192 or 256 bits only.
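The size rules summarized above can be written as two small checks. The function and constant names are hypothetical and chosen only for this sketch.

```python
# Sizes allowed by the original Rijndael specification (multiples of 32 bits).
RIJNDAEL_SIZES = {128, 160, 192, 224, 256}

# Sizes allowed by FIPS-197 (AES).
AES_KEY_SIZES = {128, 192, 256}
AES_BLOCK_SIZE = 128


def is_valid_rijndael(key_bits: int, block_bits: int) -> bool:
    """Key and block sizes are chosen independently from the same set."""
    return key_bits in RIJNDAEL_SIZES and block_bits in RIJNDAEL_SIZES


def is_valid_aes(key_bits: int, block_bits: int) -> bool:
    """AES fixes the block at 128 bits and allows three key sizes."""
    return key_bits in AES_KEY_SIZES and block_bits == AES_BLOCK_SIZE


print(is_valid_rijndael(160, 224))   # valid Rijndael, key and block differ
print(is_valid_aes(160, 128))        # 160-bit keys are not part of AES
print(is_valid_rijndael(512, 128))   # 512-bit keys are not valid in Rijndael
```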

References used for this question:

http://blogs.msdn.com/b/shawnfa/archive/2006/10/09/the-differences-between-rijndael-and-aes.aspx

and

http://csrc.nist.gov/CryptoToolkit/aes/rijndael/Rijndael.pdf

Where parties do not have a shared secret and large quantities of sensitive information must be passed, the most efficient means of transferring information is to use Hybrid Encryption Methods. What does this mean?

Use of public key encryption to secure a secret key, and message encryption using the secret key.

Use of the recipient's public key for encryption and decryption based on the recipient's private key.

Use of software encryption assisted by a hardware encryption accelerator.

Use of elliptic curve encryption.

Public key encryption uses an asymmetric algorithm, while encryption with a secret key uses a symmetric algorithm. A hybrid method uses the asymmetric algorithm only to protect the secret key, and the faster symmetric algorithm to encrypt the message itself.

The following answers are incorrect:

Use of the recipient's public key for encryption and decryption based on the recipient's private key. This is incorrect because it describes a purely asymmetric algorithm.

Use of software encryption assisted by a hardware encryption accelerator. This is incorrect; it is a distractor.

Use of Elliptic Curve Encryption. This is incorrect because it would use only an asymmetric algorithm.
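A toy sketch of the hybrid approach follows. Everything in it is an assumption made for illustration: the "public key" part is textbook RSA without padding, built from two well-known Mersenne primes, and the "secret key" part is a SHA-256 based XOR keystream. Neither is suitable for real use; the sketch only shows the division of labor between the two kinds of algorithm.

```python
import hashlib
import secrets

# Toy RSA key pair (illustration only; real keys use random primes and padding).
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent


def symmetric_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR with a SHA-256 derived keystream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def hybrid_encrypt(message: bytes, n: int, e: int) -> tuple[int, bytes]:
    session_key = secrets.token_bytes(16)                     # fresh secret key
    wrapped = pow(int.from_bytes(session_key, "big"), e, n)   # public key step
    return wrapped, symmetric_xor(session_key, message)       # bulk encryption


def hybrid_decrypt(wrapped: int, ciphertext: bytes, n: int, d: int) -> bytes:
    session_key = pow(wrapped, d, n).to_bytes(16, "big")      # private key step
    return symmetric_xor(session_key, ciphertext)


wrapped_key, ciphertext = hybrid_encrypt(b"large quantities of sensitive data", N, E)
print(hybrid_decrypt(wrapped_key, ciphertext, N, D))
```

Only the 16-byte session key goes through the slow asymmetric operation; the message, however large, is handled by the symmetric step.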

What size is an MD5 message digest (hash)?

128 bits

160 bits

256 bits

128 bytes

MD5 is a one-way hash function producing a 128-bit message digest from the input message, through 4 rounds of transformation. MD5 is specified in RFC 1321.
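The digest sizes in the answer choices can be checked with Python's standard `hashlib` module. This is only a quick sketch; SHA-1 and SHA-256 are included as assumptions to show which algorithms match the 160-bit and 256-bit distractors.

```python
import hashlib

# digest_size is reported in bytes; multiply by 8 to get bits.
md5_bits = hashlib.md5().digest_size * 8
sha1_bits = hashlib.sha1().digest_size * 8
sha256_bits = hashlib.sha256().digest_size * 8

print(md5_bits, sha1_bits, sha256_bits)
```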

Reference(s) used for this question:

TIPTON, Hal, (ISC)2, Introduction to the CISSP Exam presentation.

What is the primary role of smartcards in a PKI?

Transparent renewal of user keys

Easy distribution of the certificates between the users

Fast hardware encryption of the raw data

Tamper resistant, mobile storage and application of private keys of the users

In what type of attack does an attacker try, from several encrypted messages, to figure out the key used in the encryption process?

Known-plaintext attack

Ciphertext-only attack

Chosen-Ciphertext attack

Plaintext-only attack

In a ciphertext-only attack, the attacker has the ciphertext of several messages encrypted with the same encryption algorithm. The attacker's goal is to discover the plaintext of the messages by figuring out the key used in the encryption process. In a known-plaintext attack, the attacker has the plaintext and the ciphertext of one or more messages. In a chosen-ciphertext attack, the attacker can choose the ciphertext to be decrypted and has access to the resulting plaintext.

Source: HARRIS, Shon, All-In-One CISSP Certification Exam Guide, McGraw-Hill/Osborne, 2002, Chapter 8: Cryptography (page 578).

The RSA Algorithm uses which mathematical concept as the basis of its encryption?

Geometry

16-round ciphers

PI (3.14159...)

Two large prime numbers

Source: TIPTON, et al., Official (ISC)2 Guide to the CISSP CBK, 2007 edition, page 254.

And from the RSA web site, http://www.rsa.com/rsalabs/node.asp?id=2214 :

The RSA cryptosystem is a public-key cryptosystem that offers both encryption and digital signatures (authentication). Ronald Rivest, Adi Shamir, and Leonard Adleman developed the RSA system in 1977 [RSA78]; RSA stands for the first letter in each of its inventors' last names.

The RSA algorithm works as follows: take two large primes, p and q, and compute their product n = pq; n is called the modulus. Choose a number, e, less than n and relatively prime to (p-1)(q-1), which means e and (p-1)(q-1) have no common factors except 1. Find another number d such that (ed - 1) is divisible by (p-1)(q-1). The values e and d are called the public and private exponents, respectively. The public key is the pair (n, e); the private key is (n, d). The factors p and q may be destroyed or kept with the private key.

It is currently difficult to obtain the private key d from the public key (n, e). However if one could factor n into p and q, then one could obtain the private key d. Thus the security of the RSA system is based on the assumption that factoring is difficult. The discovery of an easy method of factoring would "break" RSA (see Question 3.1.3 and Question 2.3.3).

Here is how the RSA system can be used for encryption and digital signatures (in practice, the actual use is slightly different; see Questions 3.1.7 and 3.1.8):

Encryption

Suppose Alice wants to send a message m to Bob. Alice creates the ciphertext c by exponentiating: c = m^e mod n, where e and n are Bob's public key. She sends c to Bob. To decrypt, Bob also exponentiates: m = c^d mod n; the relationship between e and d ensures that Bob correctly recovers m. Since only Bob knows d, only Bob can decrypt this message.

Digital Signature

Suppose Alice wants to send a message m to Bob in such a way that Bob is assured the message is authentic, has not been tampered with, and is from Alice. Alice creates a digital signature s by exponentiating: s = m^d mod n, where d and n are Alice's private key. She sends m and s to Bob. To verify the signature, Bob exponentiates and checks that the message m is recovered: m = s^e mod n, where e and n are Alice's public key.

Thus encryption and authentication take place without any sharing of private keys: each person uses only another's public key or their own private key. Anyone can send an encrypted message or verify a signed message, but only someone in possession of the correct private key can decrypt or sign a message.
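The steps described above can be followed with a worked example using deliberately tiny primes. The specific values (p = 61, q = 53, e = 17, m = 65) are assumptions chosen for readability; real RSA uses primes hundreds of digits long together with padding schemes, which this sketch omits.

```python
# Key generation with toy primes.
p, q = 61, 53
n = p * q                    # modulus, 3233
phi = (p - 1) * (q - 1)      # (p-1)(q-1) = 3120
e = 17                       # public exponent, relatively prime to phi
d = pow(e, -1, phi)          # private exponent: (e*d - 1) divisible by phi

# Encryption: c = m^e mod n, decryption: m = c^d mod n.
m = 65
c = pow(m, e, n)
recovered = pow(c, d, n)

# Signature: s = m^d mod n, verification: m == s^e mod n.
s = pow(m, d, n)
verified = pow(s, e, n) == m

print(n, d, c, recovered, verified)
```

The three-argument form of `pow` performs modular exponentiation, and `pow(e, -1, phi)` (Python 3.8 or later) computes the modular inverse.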

Which protocol makes USE of an electronic wallet on a customer's PC and sends encrypted credit card information to merchant's Web server, which digitally signs it and sends it on to its processing bank?

SSH ( Secure Shell)

S/MIME (Secure MIME)

SET (Secure Electronic Transaction)

SSL (Secure Sockets Layer)

The SET protocol was introduced by Visa and Mastercard to allow for more credit card transaction possibilities. It comprises three different pieces of software, running on the customer's PC (an electronic wallet), on the merchant's Web server and on the payment server of the merchant's bank. The credit card information is sent by the customer to the merchant's Web server, which does not open it and instead digitally signs it and sends it to its bank's payment server for processing.

The following answers are incorrect because :

SSH (Secure Shell) is incorrect as it functions as a type of tunneling mechanism that provides terminal like access to remote computers.

S/MIME is incorrect as it is a standard for encrypting and digitally signing electronic mail and for providing secure data transmissions.

SSL is incorrect as it uses public key encryption and provides data encryption, server authentication, message integrity, and optional client authentication.

Reference : Shon Harris AIO v3 , Chapter-8: Cryptography , Page : 667-669

Which of the following encryption methods is known to be unbreakable?

Symmetric ciphers.

DES codebooks.

One-time pads.

Elliptic Curve Cryptography.

A One-Time Pad uses a keystream of bits that is generated completely at random, is at least as long as the message, and is used only once. Because the pad is truly random and never reused, it is considered unbreakable.
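A minimal sketch of a one-time pad follows. It assumes that the operating system's random source, reached through the standard `secrets` module, is an acceptable stand-in for a truly random pad; the function names are hypothetical.

```python
import secrets


def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """Generate a pad as long as the message and XOR it with the plaintext."""
    pad = secrets.token_bytes(len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, pad))
    return pad, ciphertext


def otp_decrypt(pad: bytes, ciphertext: bytes) -> bytes:
    """XOR with the same pad recovers the plaintext."""
    return bytes(c ^ k for c, k in zip(ciphertext, pad))


pad, ciphertext = otp_encrypt(b"MEET AT NOON")
print(otp_decrypt(pad, ciphertext))
# The pad must now be discarded; reusing it would break the security guarantee.
```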

The following answers are incorrect:

Symmetric ciphers. This is incorrect because a symmetric cipher is built from substitution and transposition. Such ciphers can be and have been broken.

DES codebooks. This is incorrect because the Data Encryption Standard (DES) has been broken. It was replaced by the Advanced Encryption Standard (AES).

Elliptic Curve Cryptography. This is incorrect because Elliptic Curve Cryptography or ECC is typically used on wireless devices such as cellular phones that have small processors. Because of the lack of processing power, the keys used are often small. The smaller the key, the easier it is considered to be to break. Also, the technology has not been around long enough or tested thoroughly enough to be considered truly unbreakable.

Which of the following services is not provided by a public key infrastructure (PKI)?

Access control

Integrity

Authentication

Reliability

A Public Key Infrastructure (PKI) provides confidentiality, access control, integrity, authentication and non-repudiation.

It does not provide reliability services.

Reference(s) used for this question:

TIPTON, Hal, (ISC)2, Introduction to the CISSP Exam presentation.

Which of the following statements pertaining to stream ciphers is correct?

A stream cipher is a type of asymmetric encryption algorithm.

A stream cipher generates what is called a keystream.

A stream cipher is slower than a block cipher.

A stream cipher is not appropriate for hardware-based encryption.

A stream cipher is a type of symmetric encryption algorithm that operates on continuous streams of plain text and is appropriate for hardware-based encryption.

Stream ciphers can be designed to be exceptionally fast, much faster than any block cipher. A stream cipher generates what is called a keystream (a sequence of bits used as a key).

Stream ciphers can be viewed as approximating the action of a proven unbreakable cipher, the one-time pad (OTP), sometimes known as the Vernam cipher. A one-time pad uses a keystream of completely random digits. The keystream is combined with the plaintext digits one at a time to form the ciphertext. This system was proved to be secure by Claude Shannon in 1949. However, the keystream must be (at least) the same length as the plaintext, and generated completely at random. This makes the system very cumbersome to implement in practice, and as a result the one-time pad has not been widely used, except for the most critical applications.

A stream cipher makes use of a much smaller and more convenient key — 128 bits, for example. Based on this key, it generates a pseudorandom keystream which can be combined with the plaintext digits in a similar fashion to the one-time pad. However, this comes at a cost: because the keystream is now pseudorandom, and not truly random, the proof of security associated with the one-time pad no longer holds: it is quite possible for a stream cipher to be completely insecure if it is not implemented properly as we have seen with the Wired Equivalent Privacy (WEP) protocol.

Encryption is accomplished by combining the keystream with the plaintext, usually with the bitwise XOR operation.
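To make the keystream and XOR steps concrete, here is a compact sketch of RC4, a widely documented stream cipher. RC4 is chosen here only because it is short enough to read in full; that choice is an assumption of this example, and RC4 is considered insecure and should not be used in new systems.

```python
def rc4_keystream(key: bytes):
    """Yield RC4 keystream bytes for the given key."""
    # Key-scheduling: permute the state array based on the key.
    s = list(range(256))
    j = 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    # Pseudo-random generation: produce one keystream byte per step.
    i = j = 0
    while True:
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        yield s[(s[i] + s[j]) % 256]


def rc4_crypt(key: bytes, data: bytes) -> bytes:
    """XOR the data with the keystream; the same call encrypts and decrypts."""
    return bytes(b ^ k for b, k in zip(data, rc4_keystream(key)))


ciphertext = rc4_crypt(b"Key", b"Plaintext")
print(ciphertext.hex())
print(rc4_crypt(b"Key", ciphertext))
```

A short key drives a long pseudorandom keystream, which is the trade-off against the one-time pad described above.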

Source: DUPUIS, Clement, CISSP Open Study Guide on domain 5, cryptography, April 1999.

More details can be obtained on Stream Ciphers in RSA Security's FAQ on Stream Ciphers.

PGP uses which of the following to encrypt data?

An asymmetric encryption algorithm

A symmetric encryption algorithm

A symmetric key distribution system

An X.509 digital certificate

Notice that the question specifically asks what PGP uses to encrypt data. For this, PGP uses a symmetric key algorithm. PGP then uses an asymmetric key algorithm to encrypt the session key and send it securely to the receiver. It is a hybrid system where both types of ciphers are used for different purposes.

Whenever a question talks about the bulk of the data to be sent, symmetric is always the best choice because of the inherent speed of symmetric ciphers. Asymmetric ciphers are 100 to 1000 times slower than symmetric ciphers.

The other answers are not correct because:

"An asymmetric encryption algorithm" is incorrect because PGP uses a symmetric algorithm to encrypt data.

"A symmetric key distribution system" is incorrect because PGP uses an asymmetric algorithm for the distribution of the session keys used for the bulk of the data.

"An X.509 digital certificate" is incorrect because PGP does not use X.509 digital certificates to encrypt the data, it uses a session key to encrypt the data.

References:

Official ISC2 Guide page: 275

All in One Third Edition page: 664 - 665

Which of the following is not an example of a block cipher?

Skipjack

IDEA

Blowfish

RC4

RC4 is a proprietary, variable-key-length stream cipher invented by Ron Rivest for RSA Data Security, Inc. Skipjack, IDEA and Blowfish are examples of block ciphers.

Source: SHIREY, Robert W., RFC2828: Internet Security Glossary, May 2000.

Which of the following keys has the SHORTEST lifespan?

Secret key

Public key

Session key

Private key

A session key is a symmetric key that is used to encrypt messages between two users. A session key is only good for one communication session between users.

For example, if Tanya has a symmetric key that she uses to encrypt messages between Lance and herself all the time, then this symmetric key would not be regenerated or changed. They would use the same key every time they communicated using encryption. However, using the same key repeatedly increases the chances of the key being captured and the secure communication being compromised. If, on the other hand, a new symmetric key were generated each time Lance and Tanya wanted to communicate, it would be used only during their dialog and then destroyed. If they wanted to communicate an hour later, a new session key would be created and shared.

The other answers are not correct because :

Public Key can be known to anyone.

Private Key must be known and used only by the owner.

Secret Keys are also called Symmetric Keys. This type of encryption relies on each user to keep the key secret and properly protected.

REFERENCES:

SHON HARRIS , ALL IN ONE THIRD EDITION : Chapter 8 : Cryptography , Page : 619-620

The Data Encryption Standard (DES) encryption algorithm has which of the following characteristics?

64 bits of data input results in 56 bits of encrypted output

128 bit key with 8 bits used for parity

64 bit blocks with a 64 bit total key length

56 bits of data input results in 56 bits of encrypted output

DES works with 64 bit blocks of text using a 64 bit key (with 8 bits used for parity, so the effective key length is 56 bits).

Some people are getting the Key Size and the Block Size mixed up. The block size is usually a specific length. For example DES uses block size of 64 bits which results in 64 bits of encrypted data for each block. AES uses a block size of 128 bits, the block size on AES can only be 128 as per the published standard FIPS-197.

A DES key consists of 64 binary digits ("0"s or "1"s) of which 56 bits are randomly generated and used directly by the algorithm. The other 8 bits, which are not used by the algorithm, may be used for error detection. The 8 error detecting bits are set to make the parity of each 8-bit byte of the key odd, i.e., there is an odd number of "1"s in each 8-bit byte. Authorized users of encrypted computer data must have the key that was used to encipher the data in order to decrypt it.
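The odd-parity rule can be sketched in a few lines. The helper names are hypothetical, and the sketch assumes the usual DES convention that the parity bit is the least significant bit of each key byte.

```python
def set_odd_parity(key: bytes) -> bytes:
    """Set the low bit of each byte so every byte has an odd number of 1s."""
    if len(key) != 8:
        raise ValueError("a DES key is 8 bytes (64 bits) long")
    out = bytearray()
    for byte in key:
        seven_bits = byte & 0xFE                 # the 7 bits used by the algorithm
        ones = bin(seven_bits).count("1")
        parity_bit = 0 if ones % 2 == 1 else 1   # make the total count odd
        out.append(seven_bits | parity_bit)
    return bytes(out)


def has_odd_parity(key: bytes) -> bool:
    """Check that every byte of the key contains an odd number of 1 bits."""
    return all(bin(byte).count("1") % 2 == 1 for byte in key)


key = set_odd_parity(bytes([0x00, 0xFF, 0x12, 0x34, 0x56, 0x78, 0x9A, 0xBC]))
print(key.hex(), has_odd_parity(key))
# 8 bytes x 7 key bits = 56 effective bits; the other 8 bits are parity.
```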

IN CONTRAST WITH AES

The input and output for the AES algorithm each consist of sequences of 128 bits (digits with values of 0 or 1). These sequences will sometimes be referred to as blocks and the number of bits they contain will be referred to as their length. The Cipher Key for the AES algorithm is a sequence of 128, 192 or 256 bits. Other input, output and Cipher Key lengths are not permitted by this standard.

The Advanced Encryption Standard (AES) specifies the Rijndael algorithm, a symmetric block cipher that can process data blocks of 128 bits, using cipher keys with lengths of 128, 192, and 256 bits. Rijndael was designed to handle additional block sizes and key lengths, however they are not adopted in the AES standard.

The AES algorithm may be used with the three different key lengths indicated above, and therefore these different “flavors” may be referred to as “AES-128”, “AES-192”, and “AES-256”.

The other answers are not correct because:

"64 bits of data input results in 56 bits of encrypted output" is incorrect because while DES does work with 64 bit block input, it results in 64 bit blocks of encrypted output.

"128 bit key with 8 bits used for parity" is incorrect because DES does not ever use a 128 bit key.

"56 bits of data input results in 56 bits of encrypted output" is incorrect because DES always works with 64 bit blocks of input/output, not 56 bits.

Reference(s) used for this question:

Official ISC2 Guide to the CISSP CBK, Second Edition, page: 336-343

http://csrc.nist.gov/publications/fips/fips197/fips-197.pdf

http://csrc.nist.gov/publications/fips/fips46-3/fips46-3.pdf

Which of the following terms can be described as the process to conceal data into another file or media in a practice known as security through obscurity?

Steganography

ADS - Alternate Data Streams

Encryption

NTFS ADS

It is the art and science of encoding hidden messages in such a way that no one, apart from the sender and intended recipient, suspects the existence of the message or could claim there is a message.

It is a form of security through obscurity.

The word steganography is of Greek origin and means "concealed writing." It combines the Greek words steganos (στεγανός), meaning "covered or protected," and graphei (γραφή) meaning "writing."

The first recorded use of the term was in 1499 by Johannes Trithemius in his Steganographia, a treatise on cryptography and steganography, disguised as a book on magic. Generally, the hidden messages will appear to be (or be part of) something else: images, articles, shopping lists, or some other cover text. For example, the hidden message may be in invisible ink between the visible lines of a private letter.

The advantage of steganography over cryptography alone is that the intended secret message does not attract attention to itself as an object of scrutiny. Plainly visible encrypted messages, no matter how unbreakable, will arouse interest, and may in themselves be incriminating in countries where encryption is illegal. Thus, whereas cryptography is the practice of protecting the contents of a message alone, steganography is concerned with concealing the fact that a secret message is being sent, as well as concealing the contents of the message.

It is sometimes referred to as Hiding in Plain Sight. The image of trees below contains another image, of a cat, hidden using steganography.

[Image: trees with a cat hidden inside]

The image below is hidden in the picture of the trees above:

[Image: the hidden cat]

The hidden image is revealed by removing all but the two least significant bits of each color component and then normalizing the result.

ABOUT MSB and LSB

One of the common methods of performing steganography is to hide bits within the Least Significant Bits (LSB) of a media file, sometimes referred to as Slack Space. When only the least significant bits are modified, it is not possible to tell whether there is a hidden message just by looking at the picture or the media. If you changed the Most Significant Bits (MSB) instead, the changes would be visible just by looking at the picture. A person can perceive only about 6 bits of depth, so changes to bits beyond the sixth bit of each color value would be undetectable to the human eye.

If we make use of a high quality digital picture, we could hide six bits of data within each pixel of the image. Each pixel has a color code composed of a Red, a Green, and a Blue value. The color code is 3 sets of 8 bits, one set for each color. You could change the last two bits of each set to hide your data. Below is a color code for one pixel in binary format. The bits are not real; they are just an example for illustration purposes:

RED          GREEN        BLUE
0101 0101    1100 1011    1110 0011
MSB   LSB    MSB   LSB    MSB   LSB

Let's say that I would like to hide the uppercase letter A within the pixels of the picture. The uppercase letter "A" has the decimal value 65 in the ASCII table, which in binary format translates to 01000001.

You can break the 8 bits of the uppercase letter A into groups of two bits as follows: 01 00 00 01

Using the pixel above, we will hide those bits within the last two bits of each color as follows:

RED GREEN BLUE

0101 0101 1100 1000 1110 0000

MSB LSB MSB LSB MSB LSB

As you can see above, the last two bits of RED were already set to the proper value of 01. We then moved to the GREEN value and changed its last two bits from 11 to 00, and finally we changed the last two bits of BLUE from 11 to 00. One pixel allowed us to hide 6 bits of data. We would have to use another pixel to hide the remaining two bits.
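The worked example above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names `embed_two_lsb` and `extract_two_lsb` are made up for illustration, and the pixel values are the example bits from the text, not data from a real image.

```python
def embed_two_lsb(channels, data_byte):
    """Hide 2-bit groups of data_byte in the two LSBs of each channel value.

    Returns the modified channel values and any 2-bit groups that did not fit.
    """
    # Split the byte into four 2-bit groups, most significant group first.
    pairs = [(data_byte >> shift) & 0b11 for shift in (6, 4, 2, 0)]
    # zip stops at the shorter sequence, so three channels consume three groups.
    stego = [(value & 0b11111100) | pair for value, pair in zip(channels, pairs)]
    return stego, pairs[len(channels):]


def extract_two_lsb(channels):
    """Read back the two least significant bits of each channel value."""
    return [value & 0b11 for value in channels]


# RED, GREEN, BLUE values from the example pixel.
pixel = [0b01010101, 0b11001011, 0b11100011]
stego_pixel, leftover = embed_two_lsb(pixel, ord("A"))

print([format(v, "08b") for v in stego_pixel])  # ['01010101', '11001000', '11100000']
print(leftover)                                  # [1] -> the final "01" needs another pixel
```

The leftover group is the last "01" of the letter A, which matches the remark that a second pixel is needed for the remaining two bits.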

The following answers are incorrect:

- ADS - Alternate Data Streams: This is almost correct but ADS is different from steganography in that ADS hides data in streams of communications or files while Steganography hides data in a single file.

- Encryption: This is almost correct but Steganography isn't exactly encryption as much as using space in a file to store another file.

- NTFS ADS: This is also almost correct in that you're hiding data where you have space to do so. NTFS, or New Technology File System common on Windows computers has a feature where you can hide files where they're not viewable under normal conditions. Tools are required to uncover the ADS-hidden files.

The following reference(s) was used to create this question:

The CCCure Security+ Holistic Tutorial at http://www.cccure.tv

and

Steganography tool

Which of the following packets should NOT be dropped at a firewall protecting an organization's internal network?

Inbound packets with Source Routing option set

Router information exchange protocols

Inbound packets with an internal address as the source IP address

Outbound packets with an external destination IP address

Normal outbound traffic has an internal source IP address and an external destination IP address.

Traffic with an internal source IP address should only come from an internal interface. Such packets coming from an external interface should be dropped.

Packets with the source-routing option enabled usually indicate a network intrusion attempt.

Router information exchange protocols like RIP and OSPF should be dropped to avoid having internal routing equipment being reconfigured by external agents.
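The four rules discussed in this explanation can be sketched as a simple filter function. This is an illustrative sketch only, not a real firewall configuration: the `Packet` fields, the `should_drop` function, and the internal range `10.0.0.0/8` are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

INTERNAL_NET = ip_network("10.0.0.0/8")   # assumed internal address range
ROUTING_PROTOCOLS = {"rip", "ospf"}       # router information exchange protocols


@dataclass
class Packet:
    src: str
    dst: str
    inbound: bool                 # True if it arrived on the external interface
    protocol: str = "tcp"
    source_routed: bool = False


def should_drop(pkt: Packet) -> bool:
    """Return True if the packet matches one of the drop rules from the text."""
    if pkt.inbound and pkt.source_routed:
        return True               # inbound packet with the source routing option set
    if pkt.protocol in ROUTING_PROTOCOLS:
        return True               # routing updates must not cross the firewall
    if pkt.inbound and ip_address(pkt.src) in INTERNAL_NET:
        return True               # internal source address seen on the external side
    return False                  # e.g. normal outbound traffic


print(should_drop(Packet("10.1.2.3", "203.0.113.7", inbound=False)))  # False
print(should_drop(Packet("10.1.2.3", "10.9.9.9", inbound=True)))      # True
```

Normal outbound traffic (internal source, external destination) is the only case in the question that passes every rule.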

Source: STREBE, Matthew and PERKINS, Charles, Firewalls 24seven, Sybex 2000, Chapter 10: The Perfect Firewall.

Secure Shell (SSH-2) supports authentication, compression, confidentiality, and integrity. SSH is commonly used as a secure alternative to all of the following protocols except:

telnet

rlogin

RSH

HTTPS

HTTPS is used for secure web transactions and is not commonly replaced by SSH.

Users often want to log on to a remote computer. Unfortunately, most early implementations to meet that need were designed for a trusted network. Protocols/programs such as TELNET, RSH, and rlogin transmit unencrypted over the network, which allows traffic to be easily intercepted. Secure shell (SSH) was designed as an alternative to the above insecure protocols and allows users to securely access resources on remote computers over an encrypted tunnel. SSH’s services include remote log-on, file transfer, and command execution. It also supports port forwarding, which redirects other protocols through an encrypted SSH tunnel. Many users protect the less secure traffic of protocols such as X Windows and VNC (virtual network computing) by forwarding it through an SSH tunnel. The SSH tunnel protects the integrity of communication, preventing session hijacking and other man-in-the-middle attacks. Another advantage of SSH over its predecessors is that it supports strong authentication. There are several alternatives for SSH clients to authenticate to an SSH server, including passwords and digital certificates. Keep in mind that authenticating with a password is still a significant improvement over the other protocols because the password is transmitted encrypted.

The following were wrong answers:

telnet is an incorrect choice. SSH is commonly used as a more secure alternative to telnet. In fact, Telnet should no longer be used today.

rlogin is an incorrect choice. SSH is commonly used as a more secure alternative to rlogin.

RSH is an incorrect choice. SSH is commonly used as a more secure alternative to RSH.

Reference(s) used for this question:

Hernandez CISSP, Steven (2012-12-21). Official (ISC)2 Guide to the CISSP CBK, Third Edition ((ISC)2 Press) (Kindle Locations 7077-7088). Auerbach Publications. Kindle Edition.

Which of the following countermeasures would be the most appropriate to prevent possible intrusion or damage from wardialing attacks?

Monitoring and auditing for such activity

Require user authentication

Making sure only necessary phone numbers are made public

Using completely different numbers for voice and data accesses

Knowledge of modem numbers is a poor access control method, as an attacker can discover modem numbers by dialing all numbers in a range. Requiring user authentication before remote access is granted will help in avoiding unauthorized access over a modem line.

"Monitoring and auditing for such activity" is incorrect. While monitoring and auditing can assist in detecting a wardialing attack, they do not defend against a successful wardialing attack.

"Making sure that only necessary phone numbers are made public" is incorrect. Since a wardialing attack blindly calls all numbers in a range, whether certain numbers in the range are public or not is irrelevant.

"Using completely different numbers for voice and data accesses" is incorrect. Using different number ranges for voice and data access might help prevent an attacker from stumbling across the data lines while wardialing the public voice number range, but this is not an adequate countermeasure.

References:

CBK, p. 214

AIO3, p. 534-535

What is malware that can spread itself over open network connections?

Worm

Rootkit

Adware

Logic Bomb

Computer worms are also known as Network Mobile Code, or a virus-like bit of code that can replicate itself over a network, infecting adjacent computers.

A computer worm is a standalone malware computer program that replicates itself in order to spread to other computers. Often, it uses a computer network to spread itself, relying on security failures on the target computer to access it. Unlike a computer virus, it does not need to attach itself to an existing program. Worms almost always cause at least some harm to the network, even if only by consuming bandwidth, whereas viruses almost always corrupt or modify files on a targeted computer.

A notable example is the SQL Slammer computer worm that spread globally in ten minutes on January 25, 2003. I myself came to work that day as a software tester and found all my SQL servers infected and actively trying to infect other computers on the test network.

Microsoft had released a patch about six months earlier. Any unpatched system exposed to a 376-byte UDP packet from an infected host would become compromised.

Ordinarily, infected computers are not to be trusted and must be rebuilt from scratch but the vulnerability could be mitigated by replacing a single vulnerable dll called sqlsort.dll.

Replacing that with the patched version completely disabled the worm which really illustrates to us the importance of actively patching our systems against such network mobile code.

The following answers are incorrect:

- Rootkit: Sorry, this isn't correct because a rootkit isn't ordinarily classified as network mobile code like a worm is. This isn't to say that a rootkit couldn't be included in a worm, just that a rootkit isn't usually classified like a worm. A rootkit is a stealthy type of software, typically malicious, designed to hide the existence of certain processes or programs from normal methods of detection and enable continued privileged access to a computer. The term rootkit is a concatenation of "root" (the traditional name of the privileged account on Unix operating systems) and the word "kit" (which refers to the software components that implement the tool). The term "rootkit" has negative connotations through its association with malware.

- Adware: Incorrect answer. Sorry but adware isn't usually classified as a worm. Adware, or advertising-supported software, is any software package which automatically renders advertisements in order to generate revenue for its author. The advertisements may be in the user interface of the software or on a screen presented to the user during the installation process. The functions may be designed to analyze which Internet sites the user visits and to present advertising pertinent to the types of goods or services featured there. The term is sometimes used to refer to software that displays unwanted advertisements.

- Logic Bomb: Logic bombs like adware or rootkits could be spread by worms if they exploit the right service and gain root or admin access on a computer.

The following reference(s) was used to create this question:

The CCCure CompTIA Holistic Security+ Tutorial and CBT

and

http://en.wikipedia.org/wiki/Rootkit

and

http://en.wikipedia.org/wiki/Computer_worm

Crackers today are MOST often motivated by their desire to:

Help the community in securing their networks.

Seeing how far their skills will take them.

Getting recognition for their actions.

Gaining Money or Financial Gains.

A few years ago the best choice for this question would have been seeing how far their skills can take them. Today this has changed greatly: most crimes committed are financially motivated.

Profit is the most widespread motive behind all cybercrimes and, indeed, most crimes- everyone wants to make money. Hacking for money or for free services includes a smorgasbord of crimes such as embezzlement, corporate espionage and being a “hacker for hire”. Scams are easier to undertake but the likelihood of success is much lower. Money-seekers come from any lifestyle but those with persuasive skills make better con artists in the same way as those who are exceptionally tech-savvy make better “hacks for hire”.

"White Hats" are the security specialists (as opposed to Black Hats) interested in helping the community secure their networks. They test systems and networks with the owner's authorization.

A "Black Hat" is someone who uses their skills for offensive purposes. Black Hats do not seek authorization before they attempt to compromise the security mechanisms in place.

"Grey Hats" are people who sometimes work as White Hats and at other times work as Black Hats. They have not yet made up their minds as to which side they prefer to be on.

The following are incorrect answers:

All the other choices could be possible reasons but the best one today is really for financial gains.

References used for this question:

http://library.thinkquest.org/04oct/00460/crimeMotives.html

and

http://www.informit.com/articles/article.aspx?p=1160835

and

http://www.aic.gov.au/documents/1/B/A/%7B1BA0F612-613A-494D-B6C5-06938FE8BB53%7Dhtcb006.pdf

Java is not:

Object-oriented.

Distributed.

Architecture Specific.

Multithreaded.

Java was developed so that the same program could be executed on multiple hardware and operating system platforms. It is not Architecture Specific.

The following answers are incorrect:

Object-oriented. Is not correct because Java is object-oriented. Java programs are written using the object-oriented programming methodology.

Distributed. Is incorrect because Java was developed to be distributed, that is, to run on multiple computer systems over a network.

Multithreaded. Is incorrect because Java is multithreaded, that is, a single program can run several threads of execution concurrently.

A virus is a program that can replicate itself on a system but not necessarily spread itself by network connections.

The Diffie-Hellman algorithm is primarily used to provide which of the following?

Confidentiality

Key Agreement

Integrity

Non-repudiation

Diffie and Hellman describe a means for two parties to agree upon a shared secret in such a way that the secret will be unavailable to eavesdroppers. This secret may then be converted into cryptographic keying material for other (symmetric) algorithms. A large number of minor variants of this process exist. See RFC 2631 Diffie-Hellman Key Agreement Method for more details.

In 1976, Diffie and Hellman were the first to introduce the notion of public key cryptography, requiring a system allowing the exchange of secret keys over non-secure channels. The Diffie-Hellman algorithm is used for key exchange between two parties communicating with each other. It cannot be used for encrypting and decrypting messages, or for digital signatures.

Diffie and Hellman sought to address the issue of having to exchange keys via courier and other unsecure means. Their efforts were the FIRST asymmetric key agreement algorithm. Since the Diffie-Hellman algorithm cannot be used for encrypting and decrypting it cannot provide confidentiality nor integrity. This algorithm also does not provide for digital signature functionality and thus non-repudiation is not a choice.

NOTE: The DH algorithm is susceptible to man-in-the-middle attacks.

KEY AGREEMENT VERSUS KEY EXCHANGE

A key exchange can be done in multiple ways. It can be done in person, or I can generate a key and get it securely to you by encrypting it with your public key. A Key Agreement protocol is carried out over a public medium such as the Internet, using a mathematical formula to arrive at a common value on both sides of the communication link without the enemy being able to learn what the agreed value is.
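The key agreement described above can be illustrated with toy numbers. This is a sketch only: the prime `p = 23`, the generator `g = 5`, and both private values are deliberately tiny and fixed so the arithmetic can be checked by hand. Real deployments use very large primes and randomly generated private values.

```python
# Public parameters, known to everyone including an eavesdropper.
p = 23   # small prime modulus (illustrative only)
g = 5    # generator

# Private values, never transmitted (fixed here so the result is repeatable).
alice_private = 6
bob_private = 15

# Each side sends g^private mod p over the public channel.
alice_public = pow(g, alice_private, p)   # 8
bob_public = pow(g, bob_private, p)       # 19

# Each side combines the other's public value with its own private value.
alice_shared = pow(bob_public, alice_private, p)
bob_shared = pow(alice_public, bob_private, p)

print(alice_public, bob_public)   # 8 19
print(alice_shared, bob_shared)   # 2 2
```

Both sides arrive at the same value without ever sending it, which is the "common value on both sides" mentioned above. Nothing in the exchange authenticates the public values, which is why the algorithm on its own is open to man-in-the-middle attacks.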

The following answers were incorrect:

All of the other choices were not correct choices

Reference(s) used for this question:

Shon Harris, CISSP All In One (AIO), 6th edition . Chapter 7, Cryptography, Page 812.

http://en.wikipedia.org/wiki/Diffie%E2%80%93Hellman_key_exchange

What do the ILOVEYOU and Melissa virus attacks have in common?

They are both denial-of-service (DOS) attacks.

They have nothing in common.

They are both masquerading attacks.

They are both social engineering attacks.

While a masquerading attack can be considered a type of social engineering, the Melissa and ILOVEYOU viruses are examples of masquerading attacks, even though they may also cause some kind of denial of service when mail servers are flooded with messages. In this case, the receiver confidently opens a message that appears to come from a trusted individual, only to find that the message was sent using the trusted party's identity.

Source: HARRIS, Shon, All-In-One CISSP Certification Exam Guide, McGraw-Hill/Osborne, 2002, Chapter 10: Law, Investigation, and Ethics (page 650).

Which of the following computer crime is MORE often associated with INSIDERS?

IP spoofing

Password sniffing

Data diddling

Denial of service (DOS)

Data diddling refers to the alteration of existing data, most often before it is entered into an application. This type of crime is extremely common and can be prevented by using appropriate access controls and proper segregation of duties. It is more likely to be perpetrated by insiders, who have access to data before it is processed.

The other answers are incorrect because:

IP Spoofing is not correct as the questions asks about the crime associated with the insiders. Spoofing is generally accomplished from the outside.

Password sniffing is also not the BEST answer as it requires a lot of technical knowledge in understanding the encryption and decryption process.

Denial of service (DOS) is also incorrect as most Denial of service attacks occur over the internet.

Reference : Shon Harris , AIO v3 , Chapter-10 : Law , Investigation & Ethics , Page : 758-760.

Which virus category has the capability of changing its own code, making it harder to detect by anti-virus software?

Stealth viruses

Polymorphic viruses

Trojan horses

Logic bombs

A polymorphic virus has the capability of changing its own code, enabling it to have many different variants, making it harder to detect by anti-virus software. The particularity of a stealth virus is that it tries to hide its presence after infecting a system. A Trojan horse is a set of unauthorized instructions that are added to or replacing a legitimate program. A logic bomb is a set of instructions that is initiated when a specific event occurs.

Source: HARRIS, Shon, All-In-One CISSP Certification Exam Guide, McGraw-Hill/Osborne, 2002, chapter 11: Application and System Development (page 786).

The high availability of multiple all-inclusive, easy-to-use hacking tools that do NOT require much technical knowledge has brought a growth in the number of which type of attackers?

Black hats

White hats

Script kiddies

Phreakers

Script kiddies are low to moderately skilled hackers who use readily available scripts and tools to launch attacks against victims.

The other answers are incorrect because:

Black hats is incorrect as they are malicious, skilled hackers.

White hats is incorrect as they are security professionals.

Phreakers is incorrect as they are telephone/PBX (private branch exchange) hackers.

Reference : Shon Harris AIO v3 , Chapter 12: Operations security , Page : 830

Which of the following is immune to the effects of electromagnetic interference (EMI) and therefore has a much longer effective usable length?

Fiber Optic cable

Coaxial cable

Twisted Pair cable

Axial cable

Fiber Optic cable is immune to the effects of electromagnetic interference (EMI) and therefore has a much longer effective usable length (up to two kilometers in some cases).

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 72.

Which of the following statements pertaining to PPTP (Point-to-Point Tunneling Protocol) is incorrect?

PPTP allows the tunnelling of any protocol that can be carried within PPP.

PPTP does not provide strong encryption.

PPTP does not support any token-based authentication method for users.

PPTP is derived from L2TP.

PPTP is an encapsulation protocol based on PPP that works at OSI layer 2 (Data Link) and that enables a single point-to-point connection, usually between a client and a server.

PPTP depends on IP to establish its connection.

As currently implemented, PPTP encapsulates PPP packets using a modified version of the generic routing encapsulation (GRE) protocol, which gives PPTP the flexibility to handle protocols other than IP, such as IPX and NetBEUI, over IP networks.

PPTP does have some limitations:

It does not provide strong encryption for protecting data, nor does it support any token-based methods for authenticating users.

L2TP is derived from L2F and PPTP, not the opposite.

Which of the following is an IP address that is private (i.e. reserved for internal networks, and not a valid address to use on the Internet)?

192.168.42.5

192.166.42.5

192.175.42.5

192.1.42.5

192.168.42.5 is a valid Class C reserved (private) address. For Class C, the reserved addresses are 192.168.0.0 - 192.168.255.255.

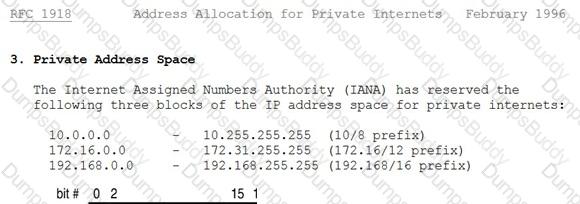

The private IP address ranges are defined within RFC 1918:

10.0.0.0 - 10.255.255.255 (10.0.0.0/8)

172.16.0.0 - 172.31.255.255 (172.16.0.0/12)

192.168.0.0 - 192.168.255.255 (192.168.0.0/16)

The following answers are incorrect:

192.166.42.5 Is incorrect because it is not a Class C reserved address.

192.175.42.5 Is incorrect because it is not a Class C reserved address.

192.1.42.5 Is incorrect because it is not a Class C reserved address.
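The four answer choices can be checked against the RFC 1918 ranges with Python's standard `ipaddress` module. This is a small sketch; the helper name `is_rfc1918` is chosen for illustration.

```python
from ipaddress import ip_address, ip_network

# The three private address blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ip_network("10.0.0.0/8"),
    ip_network("172.16.0.0/12"),
    ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address falls inside one of the RFC 1918 blocks."""
    ip = ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)


for choice in ("192.168.42.5", "192.166.42.5", "192.175.42.5", "192.1.42.5"):
    print(choice, is_rfc1918(choice))
# Only 192.168.42.5 prints True.
```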

Which type of firewall can be used to track connectionless protocols such as UDP and RPC?

Stateful inspection firewalls

Packet filtering firewalls

Application level firewalls

Circuit level firewalls

Packets in a stateful inspection firewall are queued and then analyzed at all OSI layers, providing a more complete inspection of the data. By examining the state and context of the incoming data packets, it helps to track the protocols that are considered "connectionless", such as UDP-based applications and Remote Procedure Calls (RPC).
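One way to picture how state helps with a connectionless protocol is a table of recently seen outbound UDP flows: a reply is allowed only if it matches a flow that an internal host started. The sketch below is a simplified illustration under that assumption; the class name `UdpStateTable`, its methods, and the 30-second timeout are hypothetical and do not describe any particular firewall product.

```python
class UdpStateTable:
    """Remember outbound UDP flows so that only matching replies are allowed in."""

    def __init__(self, timeout: float = 30.0):
        self.timeout = timeout      # seconds a flow stays valid without traffic
        self._flows = {}            # (src, sport, dst, dport) -> last seen time

    def note_outbound(self, src, sport, dst, dport, now):
        """Record an outbound datagram from an internal host."""
        self._flows[(src, sport, dst, dport)] = now

    def allow_inbound(self, src, sport, dst, dport, now):
        """Allow an inbound datagram only if it answers a recent outbound flow."""
        key = (dst, dport, src, sport)          # the reply reverses the endpoints
        last_seen = self._flows.get(key)
        if last_seen is None or now - last_seen > self.timeout:
            self._flows.pop(key, None)          # unknown or expired flow
            return False
        self._flows[key] = now                  # refresh the entry
        return True


table = UdpStateTable()
table.note_outbound("10.0.0.5", 40000, "198.51.100.53", 53, now=0.0)
print(table.allow_inbound("198.51.100.53", 53, "10.0.0.5", 40000, now=1.0))   # True
print(table.allow_inbound("203.0.113.9", 53, "10.0.0.5", 40000, now=1.0))     # False
```

A plain packet filter has no such table, so it cannot tell a legitimate UDP reply from an unsolicited datagram aimed at the same port.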

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, Chapter 3: Telecommunications and Network Security (page 91).

Which of the following rules appearing in an Internet firewall policy is inappropriate?

Source routing shall be disabled on all firewalls and external routers.

Firewalls shall be configured to transparently allow all outbound and inbound services.

Firewalls should fail to a configuration that denies all services, and require a firewall administrator to re-enable services after a firewall has failed.

Firewalls shall not accept traffic on its external interfaces that appear to be coming from internal network addresses.

Unless approved by the Network Services manager, all in-bound services shall be intercepted and processed by the firewall. Allowing unrestricted services inbound and outbound is certainly NOT recommended and very dangerous.

Pay close attention to the keyword: all

All of the other choices presented are recommended practices for a firewall policy.

Reference(s) used for this question:

GUTTMAN, Barbara & BAGWILL, Robert, NIST Special Publication 800-xx, Internet Security Policy: A Technical Guide, Draft Version, May 25, 2000 (page 78).

Which of the following services is a distributed database that translates host names to IP addresses and IP addresses to host names?

DNS

FTP

SSH

SMTP

The Domain Name System (DNS) is a hierarchical distributed naming system for computers, services, or any resource connected to the Internet or a private network. It associates information from domain names with each of the assigned entities. Most prominently, it translates easily memorized domain names to the numerical IP addresses needed for locating computer services and devices worldwide. The Domain Name System is an essential component of the functionality of the Internet.
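The two directions of translation can be sketched with a toy in-memory table. This is an illustration only and makes no network queries: the host names and the documentation-range addresses are invented, and the table names `FORWARD_ZONE` and `REVERSE_ZONE` are hypothetical. Reverse lookups in DNS are keyed by a name under `in-addr.arpa`, which the standard `ipaddress` module can build.

```python
from ipaddress import ip_address

# Forward mapping: host name -> IP address (made-up example records).
FORWARD_ZONE = {
    "www.example.com": "192.0.2.10",
    "mail.example.com": "192.0.2.25",
}

# Reverse mapping: in-addr.arpa name -> host name, derived from the forward table.
REVERSE_ZONE = {
    ip_address(addr).reverse_pointer: name for name, addr in FORWARD_ZONE.items()
}


def forward_lookup(name):
    """Host name to IP address."""
    return FORWARD_ZONE.get(name)


def reverse_lookup(addr):
    """IP address to host name, via its in-addr.arpa name."""
    return REVERSE_ZONE.get(ip_address(addr).reverse_pointer)


print(forward_lookup("www.example.com"))        # 192.0.2.10
print(ip_address("192.0.2.10").reverse_pointer)  # 10.2.0.192.in-addr.arpa
print(reverse_lookup("192.0.2.25"))             # mail.example.com
```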

For your exam you should know the following general Internet terminology:

Network Access Point (NAP) - Internet service providers access the Internet using a network access point. A Network Access Point (NAP) was a public network exchange facility where Internet service providers (ISPs) connected with one another in peering arrangements. The NAPs were a key component in the transition from the 1990s NSFNET era (when many networks were government sponsored and commercial traffic was prohibited) to the commercial Internet providers of today. They were often points of considerable Internet congestion.

Internet Service Provider (ISP) - An Internet service provider (ISP) is an organization that provides services for accessing, using, or participating in the Internet. Internet service providers may be organized in various forms, such as commercial, community-owned, non-profit, or otherwise privately owned. Internet services typically provided by ISPs include Internet access, Internet transit, domain name registration, web hosting, co-location.

Telnet or Remote Terminal Control Protocol - A terminal emulation program for TCP/IP networks such as the Internet. The Telnet program runs on your computer and connects your PC to a server on the network. You can then enter commands through the Telnet program and they will be executed as if you were entering them directly on the server console. This enables you to control the server and communicate with other servers on the network. To start a Telnet session, you must log in to a server by entering a valid username and password. Telnet is a common way to remotely control Web servers.

Internet Link - An Internet link is a connection between Internet users and the Internet service provider.

Secure Shell or Secure Socket Shell (SSH) - Secure Shell (SSH), sometimes known as Secure Socket Shell, is a UNIX-based command interface and protocol for securely getting access to a remote computer. It is widely used by network administrators to control Web and other kinds of servers remotely. SSH is actually a suite of three utilities - slogin, ssh, and scp - that are secure versions of the earlier UNIX utilities, rlogin, rsh, and rcp. SSH commands are encrypted and secure in several ways. Both ends of the client/server connection are authenticated using a digital certificate, and passwords are protected by being encrypted.

Domain Name System (DNS) - The Domain Name System (DNS) is a hierarchical distributed naming system for computers, services, or any resource connected to the Internet or a private network. It associates information from domain names with each of the assigned entities. Most prominently, it translates easily memorized domain names to the numerical IP addresses needed for locating computer services and devices worldwide. The Domain Name System is an essential component of the functionality of the Internet.

File Transfer Protocol (FTP) - The File Transfer Protocol or FTP is a client/server application that is used to move files from one system to another. The client connects to the FTP server, authenticates and is given access that the server is configured to permit. FTP servers can also be configured to allow anonymous access by logging in with an email address but no password. Once connected, the client may move around between directories with commands available

Simple Mail Transfer Protocol (SMTP) - SMTP is a TCP/IP protocol used in sending and receiving e-mail. However, since it is limited in its ability to queue messages at the receiving end, it is usually used with one of two other protocols, POP3 or IMAP, that let the user save messages in a server mailbox and download them periodically from the server. In other words, users typically use a program that uses SMTP for sending e-mail and either POP3 or IMAP for receiving e-mail. On Unix-based systems, sendmail is the most widely used SMTP server for e-mail. A commercial package, Sendmail, includes a POP3 server. Microsoft Exchange includes an SMTP server and can also be set up to include POP3 support.

The following answers are incorrect:

SMTP - Is incorrect because SMTP is a TCP/IP protocol used in sending and receiving e-mail. It does not translate host names to IP addresses.

FTP - Is incorrect because FTP is a client/server application used to move files from one system to another. It does not translate host names to IP addresses.

SSH - Is incorrect because SSH is a command interface and protocol for securely getting access to a remote computer. It does not translate host names to IP addresses.

The following reference(s) were/was used to create this question:

CISA review manual 2014 page number 273 and 274

Remote Procedure Call (RPC) is a protocol that one program can use to request a service from a program located in another computer in a network. Within which OSI/ISO layer is RPC implemented?

Session layer

Transport layer

Data link layer

Network layer

The Answer: Session layer, which establishes, maintains and manages sessions and synchronization of data flow. Session layer protocols control application-to-application communications, which is what an RPC call is.

The following answers are incorrect:

Transport layer: The Transport layer handles computer-to-computer communications, rather than application-to-application communications like RPC.

Data link Layer: The Data Link layer protocols can be divided into either Logical Link Control (LLC) or Media Access Control (MAC) sublayers. Protocols like SLIP, PPP, RARP and L2TP are at this layer. An application-to-application protocol like RPC would not be addressed at this layer.

Network layer: The Network Layer is mostly concerned with routing and addressing of information, not application-to-application communication calls such as an RPC call.

The following reference(s) were/was used to create this question:

The Remote Procedure Call (RPC) protocol is implemented at the Session layer, which establishes, maintains and manages sessions as well as synchronization of the data flow.

Source: Jason Robinett's CISSP Cram Sheet: domain2.

Source: Shon Harris AIO v3 pg. 423

Which type of attack involves hijacking a session between a host and a target by predicting the target's choice of an initial TCP sequence number?

IP spoofing attack

SYN flood attack

TCP sequence number attack

Smurf attack

A TCP sequence number attack exploits the communication session which was established between the target and the trusted host that initiated the session. It involves hijacking the session between the host and the target by predicting the target's choice of an initial TCP sequence number. An IP spoofing attack is used to convince a system that it is communicating with a known entity, which gives an intruder access. It involves replacing the source address of a packet with a trusted source's address. A SYN flood attack occurs when an attacker floods a system with connection requests but does not respond when the target system replies to those requests. A smurf attack occurs when an attacker sends a spoofed (IP spoofing) PING (ICMP ECHO) packet to the broadcast address of a large network (the bounce site). Because the modified packet carries the address of the target system as its source, all devices on the bounce site's network respond with an ICMP ECHO REPLY to the target system, which is then saturated with those replies.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, Chapter 3: Telecommunications and Network Security (page 77).

Which of the following is needed for System Accountability?

Audit mechanisms.

Documented design as laid out in the Common Criteria.

Authorization.

Formal verification of system design.

Audit mechanisms are a means of tracking user actions. Through the use of audit logs and other tools, user actions are recorded and can be used at a later date to verify what actions were performed.

Accountability is the ability to identify users and to be able to track user actions.
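The idea of recording who did what, so that it can be verified later, can be sketched as a tiny append-only log. This is an illustrative sketch only: the `AuditLog` and `AuditRecord` names, the user names, and the actions are invented, and a real audit mechanism would also protect the log itself from tampering.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    timestamp: datetime
    user: str        # the identified user, which is what makes accountability possible
    action: str


class AuditLog:
    """Append-only list of user actions that can be reviewed at a later date."""

    def __init__(self):
        self._records = []

    def record(self, user: str, action: str) -> AuditRecord:
        entry = AuditRecord(datetime.now(timezone.utc), user, action)
        self._records.append(entry)
        return entry

    def actions_by(self, user: str):
        """Return every recorded action for one user, oldest first."""
        return [r.action for r in self._records if r.user == user]


log = AuditLog()
log.record("alice", "login")
log.record("bob", "read payroll.xlsx")
log.record("alice", "delete report.doc")

print(log.actions_by("alice"))   # ['login', 'delete report.doc']
```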

The following answers are incorrect:

Documented design as laid out in the Common Criteria. Is incorrect because the Common Criteria is an international standard to evaluate trust and would not be a factor in System Accountability.

Authorization. Is incorrect because Authorization is granting access to subjects, just because you have authorization does not hold the subject accountable for their actions.

Formal verification of system design. Is incorrect because all you have done is to verify the system design and have not taken any steps toward system accountability.

References:

OIG CBK Glossary (page 778)

Knowledge-based Intrusion Detection Systems (IDS) are more common than:

Network-based IDS

Host-based IDS

Behavior-based IDS

Application-Based IDS

Knowledge-based IDS are more common than behavior-based ID systems.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 63.

Application-Based IDS - "a subset of HIDS that analyze what's going on in an application using the transaction log files of the application." Source: Official ISC2 CISSP CBK Review Seminar Student Manual Version 7.0 p. 87

Host-Based IDS - "an implementation of IDS capabilities at the host level. Its most significant difference from NIDS is intrusion detection analysis, and related processes are limited to the boundaries of the host." Source: Official ISC2 Guide to the CISSP CBK - p. 197

Network-Based IDS - "a network device, or dedicated system attached to the network, that monitors traffic traversing the network segment for which it is integrated." Source: Official ISC2 Guide to the CISSP CBK - p. 196

CISSP for Dummies, a book that we recommend for a quick overview of the 10 domains, has nice and concise coverage of the subject:

Intrusion detection is defined as real-time monitoring and analysis of network activity and data for potential vulnerabilities and attacks in progress. One major limitation of current intrusion detection system (IDS) technologies is the requirement to filter false alarms lest the operator (system or security administrator) be overwhelmed with data. IDSes are classified in many different ways, including active and passive, network-based and host-based, and knowledge-based and behavior-based:

Active and passive IDS

An active IDS (now more commonly known as an intrusion prevention system — IPS) is a system that's configured to automatically block suspected attacks in progress without any intervention required by an operator. IPS has the advantage of providing real-time corrective action in response to an attack but has many disadvantages as well. An IPS must be placed in-line along a network boundary; thus, the IPS itself is susceptible to attack. Also, if false alarms and legitimate traffic haven't been properly identified and filtered, authorized users and applications may be improperly denied access. Finally, the IPS itself may be used to effect a Denial of Service (DoS) attack by intentionally flooding the system with alarms that cause it to block connections until no connections or bandwidth are available.

A passive IDS is a system that's configured only to monitor and analyze network traffic activity and alert an operator to potential vulnerabilities and attacks. It isn't capable of performing any protective or corrective functions on its own. The major advantages of passive IDSes are that these systems can be easily and rapidly deployed and are not normally susceptible to attack themselves.

Network-based and host-based IDS

A network-based IDS usually consists of a network appliance (or sensor) with a Network Interface Card (NIC) operating in promiscuous mode and a separate management interface. The IDS is placed along a network segment or boundary and monitors all traffic on that segment.

A host-based IDS requires small programs (or agents) to be installed on individual systems to be monitored. The agents monitor the operating system and write data to log files and/or trigger alarms. A host-based IDS can only monitor the individual host systems on which the agents are installed; it doesn't monitor the entire network.

Knowledge-based and behavior-based IDS

A knowledge-based (or signature-based) IDS references a database of previous attack profiles and known system vulnerabilities to identify active intrusion attempts. Knowledge-based IDS is currently more common than behavior-based IDS.

Advantages of knowledge-based systems include the following:

It has lower false alarm rates than behavior-based IDS.

Alarms are more standardized and more easily understood than behavior-based IDS.

Disadvantages of knowledge-based systems include these:

Signature database must be continually updated and maintained.

New, unique, or original attacks may not be detected or may be improperly classified.
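
A minimal sketch of knowledge-based matching follows. The two signatures and the function name match_signatures are illustrative assumptions only; production signature databases are far larger and use richer pattern languages than plain substring checks.

```python
# Hypothetical signature database: name -> substring that identifies the attack.
SIGNATURES = {
    "path-traversal": "../",
    "sql-injection": "' OR '1'='1",
}


def match_signatures(payload, signatures=SIGNATURES):
    """Return the names of all known signatures found in the payload."""
    return sorted(name for name, pattern in signatures.items() if pattern in payload)
```

A payload that matches no stored pattern returns an empty list, which is exactly the disadvantage listed above: an attack without a signature passes unnoticed until the database is updated.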

A behavior-based (or statistical anomaly–based) IDS references a baseline or learned pattern of normal system activity to identify active intrusion attempts. Deviations from this baseline or pattern cause an alarm to be triggered.

Advantages of behavior-based systems include that they

Dynamically adapt to new, unique, or original attacks.

Are less dependent on identifying specific operating system vulnerabilities.

Disadvantages of behavior-based systems include

Higher false alarm rates than knowledge-based IDSes.

Usage patterns that may change often and may not be static enough to implement an effective behavior-based IDS.
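
The baseline idea behind a behavior-based IDS can be sketched with simple statistics. The example below assumes a single numeric metric (for instance, logins per hour) and a three-standard-deviation threshold; both choices, and the function names, are assumptions for illustration rather than how any particular product works.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Learn a baseline (mean, standard deviation) from normal activity samples."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that deviates from the baseline by more than `threshold` deviations."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation was observed in training; any difference is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold
```

No attack signature is needed, which is why new attacks can be caught, but a legitimate change in usage also shifts the metric and raises a false alarm.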

Which of the following would assist the most in Host Based intrusion detection?

audit trails.

access control lists.

security clearances

host-based authentication

To assist in Intrusion Detection you would review audit logs for access violations.

The following answers are incorrect:

access control lists. This is incorrect because access control lists determine who has access to what but do not detect intrusions.

security clearances. This is incorrect because security clearances determine who has access to what but do not detect intrusions.

host-based authentication. This is incorrect because host-based authentication determines who has been authenticated to the system but does not detect intrusions.

Which of the following Intrusion Detection Systems (IDS) uses a database of previous attacks and known system vulnerabilities, monitors for current attempts to exploit those vulnerabilities, and triggers an alarm if an attempt is found?

Knowledge-Based ID System

Application-Based ID System

Host-Based ID System

Network-Based ID System

Knowledge-based Intrusion Detection Systems use a database of previous attacks and known system vulnerabilities to look for current attempts to exploit those vulnerabilities, and trigger an alarm if an attempt is found.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 87.

Application-Based ID System - "a subset of HIDS that analyze what's going on in an application using the transaction log files of the application." Source: Official ISC2 CISSP CBK Review Seminar Student Manual Version 7.0 p. 87

Host-Based ID System - "an implementation of IDS capabilities at the host level. Its most significant difference from NIDS is intrusion detection analysis, and related processes are limited to the boundaries of the host." Source: Official ISC2 Guide to the CISSP CBK - p. 197

Network-Based ID System - "a network device, or dedicated system attached to the network, that monitors traffic traversing the network segment for which it is integrated." Source: Official ISC2 Guide to the CISSP CBK - p. 196

Which of the following is an issue with signature-based intrusion detection systems?

Only previously identified attack signatures are detected.

Signature databases must be augmented with inferential elements.

It runs only on the Windows operating system.

Hackers can circumvent signature evaluations.

An issue with signature-based IDS is that only attacks whose signatures are stored in its database are detected.

New attacks without a signature would not be reported. Signature databases therefore require constant updates in order to maintain their effectiveness.

Reference used for this question:

KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 49.

Which of the following tools is less likely to be used by a hacker?

l0phtcrack

Tripwire

OphCrack

John the Ripper

Tripwire is an integrity checking product, triggering alarms when important files (e.g. system or configuration files) are modified.

This is a tool that is not likely to be used by hackers, other than for studying its workings in order to circumvent it.

The other programs are password-cracking programs and are likely to be used by security administrators as well as by hackers. More information regarding Tripwire is available on the Tripwire, Inc. web site.
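
The general idea of integrity checking can be sketched as follows: record a cryptographic hash of each important file, then compare later hashes against that baseline. This is an illustration of the technique only, not Tripwire's actual implementation; the function names and the choice of SHA-256 are assumptions.

```python
import hashlib
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 digest of a file's contents as a hex string."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def snapshot(paths):
    """Record a baseline mapping of file path -> digest."""
    return {str(p): file_digest(p) for p in paths}


def changed_files(baseline, paths):
    """Return the paths whose current digest differs from the baseline."""
    current = snapshot(paths)
    return sorted(p for p, digest in current.items() if baseline.get(p) != digest)
```

Any path returned by changed_files would be a candidate for an alarm. For the comparison to be trustworthy, the baseline itself has to be stored where an intruder cannot rewrite it.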

NOTE:

The biggest competitor to the commercial version of Tripwire is the freeware version of Tripwire. You can get the Open Source version of Tripwire at the following URL: http://sourceforge.net/projects/tripwire/

What ensures that the control mechanisms correctly implement the security policy for the entire life cycle of an information system?

Accountability controls

Mandatory access controls

Assurance procedures

Administrative controls

Controls provide accountability for individuals accessing information. Assurance procedures ensure that access control mechanisms correctly implement the security policy for the entire life cycle of an information system.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, Chapter 2: Access control systems (page 33).

Attributable data should be:

always traced to individuals responsible for observing and recording the data

sometimes traced to individuals responsible for observing and recording the data

never traced to individuals responsible for observing and recording the data

often traced to individuals responsible for observing and recording the data

As per the FDA, data should be attributable, original, accurate, contemporaneous, and legible. In an automated system, attributability could be achieved by a computer system designed to identify the individuals responsible for any input.

Source: U.S. Department of Health and Human Services, Food and Drug Administration, Guidance for Industry - Computerized Systems Used in Clinical Trials, April 1999, page 1.

In what way can violation clipping levels assist in violation tracking and analysis?

Clipping levels set a baseline for acceptable normal user errors, and violations exceeding that threshold will be recorded for analysis of why the violations occurred.

Clipping levels enable a security administrator to customize the audit trail to record only those violations which are deemed to be security relevant.

Clipping levels enable the security administrator to customize the audit trail to record only actions for users with access to user accounts with a privileged status.

Clipping levels enable a security administrator to view all reductions in security levels which have been made to user accounts which have incurred violations.

Companies can set predefined thresholds for the number of certain types of errors that will be allowed before the activity is considered suspicious. The threshold is a baseline for violation activities that may be normal for a user to commit before alarms are raised. This baseline is referred to as a clipping level.
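
A clipping level can be sketched as a simple per-user threshold. In the example below, the input is assumed to be a list of user IDs, one per recorded error (such as a failed login); the function name and input format are hypothetical.

```python
from collections import Counter


def exceeds_clipping_level(error_events, clipping_level):
    """Return users whose error count is above the clipping level.

    `error_events` is an iterable of user IDs, one entry per recorded error.
    Counts at or below the clipping level are treated as normal user mistakes.
    """
    counts = Counter(error_events)
    return {user: n for user, n in counts.items() if n > clipping_level}
```

With a clipping level of 3, a user with one mistyped password is ignored, while a user with four failures is reported for analysis.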

The following are incorrect answers:

Clipping levels enable a security administrator to customize the audit trail to record only those violations which are deemed to be security relevant. This is not the best answer: you would not record ONLY security-relevant violations. All violations would be recorded, as well as all actions performed by authorized users that may not trigger a violation. This could allow you to identify abnormal activities or fraud after the fact.

Clipping levels enable the security administrator to customize the audit trail to record only actions for users with access to user accounts with a privileged status. It could record all security violations whether the user is a normal user or a privileged user.

Clipping levels enable a security administrator to view all reductions in security levels which have been made to user accounts which have incurred violations. The keyword "ALL" makes this answer wrong. It may detect SOME but not all violations. For example, application-level attacks may not be detected.

Reference(s) used for this question:

Harris, Shon (2012-10-18). CISSP All-in-One Exam Guide, 6th Edition (p. 1239). McGraw-Hill. Kindle Edition.

and

TIPTON, Hal, (ISC)2, Introduction to the CISSP Exam presentation.

Attributes that characterize an attack are stored for reference using which of the following Intrusion Detection Systems (IDS)?

signature-based IDS

statistical anomaly-based IDS

event-based IDS

inferent-based IDS

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 49.

What is the essential difference between a self-audit and an independent audit?