Users of the Cloud Pak for Integration topology notice that the Integration Runtimes page in the Platform Navigator displays the following message: "Some runtimes cannot be created yet." Assuming that the users have the necessary permissions, what might cause this message to be displayed?

A. The Aspera, DataPower, or MQ operators have not been deployed.

B. The Platform Navigator operator has not been installed cluster-wide.

C. The ibm-entitlement-key has not been added in the same namespace as the Platform Navigator.

D. The API Connect operator has not been deployed.

In IBM Cloud Pak for Integration (CP4I), the Integration Runtimes page in the Platform Navigator provides an overview of available and deployable runtime components, such as IBM MQ, DataPower, API Connect, and Aspera.

When users see the message:

"Some runtimes cannot be created yet"

It typically indicates that one or more required operators have not been deployed. Each integration runtime requires its respective operator to be installed and running in order to create and manage instances of that runtime.

Key Reasons for This Issue:

If the Aspera, DataPower, or MQ operators are missing, their corresponding runtimes are not available in the Platform Navigator.

The Platform Navigator relies on these operators to manage the lifecycle of integration components.

Even if users have the necessary permissions, the integration runtimes cannot be provisioned without the required operators.
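To make the dependency concrete, here is a minimal Python sketch that reports which runtimes would be blocked, given the operators installed in the cluster. The operator names in `REQUIRED_OPERATORS` and the function `runtimes_blocked` are hypothetical placeholders, not the real OLM package names; in practice the installed list might be gathered from `oc get subscriptions -A`.

```python
# Hypothetical mapping of runtimes shown on the Integration Runtimes page to the
# operator each one depends on. The operator names are placeholders, not the
# exact OLM package names; substitute the names used in your catalog.
REQUIRED_OPERATORS = {
    "IBM MQ": "mq-operator",
    "DataPower Gateway": "datapower-operator",
    "Aspera HSTS": "aspera-operator",
    "API Connect": "apiconnect-operator",
}


def runtimes_blocked(installed_operators):
    """Return, sorted by name, the runtimes whose operator is not installed."""
    installed = set(installed_operators)
    return sorted(
        runtime
        for runtime, operator in REQUIRED_OPERATORS.items()
        if operator not in installed
    )


if __name__ == "__main__":
    # Example: only the API Connect operator has been installed.
    blocked = runtimes_blocked(["apiconnect-operator"])
    if blocked:
        print("Some runtimes cannot be created yet:", ", ".join(blocked))
```

Several runtimes are reported at once, which matches the reasoning that the message points to multiple missing operators.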

Why Other Options Are Incorrect:

B. The Platform Navigator operator has not been installed cluster-wide

The Platform Navigator does not need to be installed cluster-wide for runtimes to be available.

If the Platform Navigator were missing, users would not be able to reach the Integration Runtimes page at all.

C. The ibm-entitlement-key has not been added in the same namespace as the Platform Navigator

The IBM entitlement key is required for pulling images from IBM's container registry, but it does not affect the visibility of integration runtimes.

If the entitlement key were missing, installation of operators might fail, but this would not directly cause the displayed message.

D. The API Connect operator has not been deployed

While API Connect is a component of CP4I, its operator is needed only for API Connect instances, not for all integration runtimes.

The message indicates that several runtimes are unavailable, which points to several missing operators, such as those for Aspera, DataPower, or MQ, rather than to API Connect alone.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration - Installing and Managing Operators

IBM Platform Navigator and Integration Runtimes

IBM MQ, DataPower, and Aspera Operators in CP4I

Which statement is true if multiple instances of Aspera HSTS exist in a cluster?

A. Each UDP port must be unique.

B. UDP and TCP ports have to be the same.

C. Each TCP port must be unique.

D. UDP ports must be the same.

In IBM Aspera High-Speed Transfer Server (HSTS), UDP ports are crucial for enabling high-speed data transfers. When multiple instances of Aspera HSTS exist in a cluster, each instance must be assigned a unique UDP port to avoid conflicts and ensure proper traffic routing.

Key Considerations for Aspera HSTS in a Cluster:

Aspera HSTS relies on UDP for high-speed file transfers, while TCP is typically used for control and session management.

If multiple HSTS instances share the same UDP port, port conflicts occur and transfer traffic cannot be routed to the correct instance.

Assigning a unique UDP port to each instance lets traffic reach the right instance, which allows proper load balancing and optimal performance.
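A pre-deployment check for this rule can be as simple as grouping instances by UDP port. The Python sketch below assumes you have already collected each HSTS instance's UDP port into a dictionary; the instance names, port numbers, and the `duplicate_udp_ports` helper are hypothetical.

```python
from collections import defaultdict


def duplicate_udp_ports(instances):
    """Map each UDP port used by more than one instance to the (sorted) instance names.

    `instances` maps a hypothetical HSTS instance name to its UDP port.
    """
    users = defaultdict(list)
    for name, port in instances.items():
        users[port].append(name)
    return {port: sorted(names) for port, names in users.items() if len(names) > 1}


if __name__ == "__main__":
    # Hypothetical instances; use the values from your own HSTS deployments.
    cluster = {"hsts-finance": 33001, "hsts-media": 33002, "hsts-backup": 33001}
    for port, names in duplicate_udp_ports(cluster).items():
        print(f"UDP port {port} is shared by {names}; assign each instance its own port")
```

An empty result means every instance has its own UDP port; TCP ports are deliberately not checked, in line with the explanation below.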

Why Other Options Are Incorrect:

B. UDP and TCP ports have to be the same.

Incorrect, because UDP and TCP serve different purposes in Aspera HSTS.

TCP is used for session initialization and control, while UDP carries the actual data transfer.

C. Each TCP port must be unique.

Incorrect, because TCP ports do not necessarily need to be unique across multiple instances, depending on the deployment.

TCP ports can be shared if proper load balancing and routing are configured.

D. UDP ports must be the same.

Incorrect, because using the same UDP port for multiple instances causes conflicts, leading to failed transfers or degraded performance.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Aspera HSTS Configuration Guide

IBM Cloud Pak for Integration - Aspera Setup

IBM Aspera High-Speed Transfer Overview

When considering storage for a highly available single resilient queue manager, which statement is true?

A. A shared file system must be used that provides data write integrity, grants exclusive access to files, and releases locks on failure.

B. To tolerate an outage of an entire availability zone, cloud storage which replicates across two other zones must be used.

C. Persistent volumes are not supported for a resilient queue manager.

D. A single resilient queue manager takes much longer to recover than a multi-instance queue manager.

In IBM Cloud Pak for Integration (CP4I) v2021.2, when deploying a highly available single-resilient queue manager, storage considerations are crucial to ensuring fault tolerance and failover capability.

A single resilient queue manager is one queue manager instance whose data is kept on network-attached storage, so that if its node fails, OpenShift can restart the queue manager pod on another node with access to the same data. The key requirement is data write integrity: only one queue manager process may access the data at a time, and locks must be released properly if the node holding them fails.

Option A is correct: A shared file system must support data consistency and failover mechanisms to ensure that only one instance writes to the queue manager logs and data at any time. If the active instance fails, another instance can take over using the same storage.

Option B is incorrect: While cloud storage replication across availability zones is useful, it does not replace the need for a proper shared file system with write integrity.

Option C is incorrect: Persistent volumes are supported for resilient queue managers when deployed in Kubernetes environments like OpenShift, as long as they meet the required file system properties.

Option D is incorrect: a single resilient queue manager has no standby instance to fail over to. It recovers when OpenShift restarts its pod (on another node if necessary) and reattaches the same storage, and recovery time is not a storage requirement, which is what the question asks about.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ High Availability Documentation

IBM Cloud Pak for Integration Storage Considerations

IBM MQ Resiliency and Disaster Recovery Guide

What is the License Service's frequency of refreshing data?

A. 1 hour.

B. 30 seconds.

C. 5 minutes.

D. 30 minutes.

In IBM Cloud Pak Foundational Services, the License Service is responsible for collecting, tracking, and reporting license usage data. It ensures compliance by monitoring the consumption of IBM Cloud Pak licenses across the environment.

The License Service refreshes its data every 5 minutes to keep the license usage information up to date.

This frequent update cycle helps organizations maintain accurate tracking of their entitlements and avoid non-compliance issues.

Analysis of the Options:

A. 1 hour (Incorrect)

The License Service updates its records more frequently than every hour to provide timely insights.

B. 30 seconds (Incorrect)

A refresh interval of 30 seconds would be unnecessarily frequent for license tracking and would add overhead; it is not the actual interval.

C. 5 minutes (Correct)

The IBM License Service refreshes its data every 5 minutes, keeping usage figures close to current without excessive system load.

D. 30 minutes (Incorrect)

A 30-minute refresh would delay the reporting of license usage and does not match the actual behavior of the License Service.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM License Service Overview

IBM Cloud Pak License Service Data Collection Interval

IBM Cloud Pak Compliance and License Reporting

What technology are OpenShift Pipelines based on?

A. Travis

B. Jenkins

C. Tekton

D. Argo CD

OpenShift Pipelines are based on Tekton, an open-source framework for building Continuous Integration/Continuous Deployment (CI/CD) pipelines natively in Kubernetes.

Tekton provides Kubernetes-native CI/CD functionality by defining pipeline resources as custom resources (CRDs) in OpenShift. This allows for scalable, cloud-native automation of software delivery.

Why Is Tekton Used in OpenShift Pipelines?

Kubernetes-Native: Unlike Jenkins, which requires a dedicated CI server and agents, Tekton runs natively in OpenShift/Kubernetes.

Serverless & Declarative: Pipelines are defined in YAML, each run executes in pods that exist only for the duration of the run, and runs can be started by events (for example, through Tekton Triggers).

Reusable & Extensible: Developers can define Tasks, Pipelines, and Workspaces to create modular workflows.

Integration with GitOps: OpenShift Pipelines can be combined with Argo CD (Red Hat OpenShift GitOps) for GitOps-based deployment strategies.

Example of a Tekton Pipeline Definition in OpenShift:

```yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: example-pipeline
spec:
  tasks:
    - name: echo-hello
      taskSpec:
        steps:
          - name: echo
            image: ubuntu
            script: |
              #!/bin/sh
              echo "Hello, OpenShift Pipelines!"
```

Explanation of Incorrect Answers:

A. Travis → ❌ Incorrect

Travis CI is a hosted CI/CD service primarily used for GitHub projects; it is not the basis of OpenShift Pipelines.

B. Jenkins → ❌ Incorrect

OpenShift previously supported Jenkins-based CI/CD, but OpenShift Pipelines (Tekton) is now the recommended Kubernetes-native alternative.

Jenkins requires a dedicated server and agents, whereas Tekton runs each pipeline step as a container in pods on the cluster, with no CI server to maintain.

D. Argo CD → ❌ Incorrect

Argo CD is used for GitOps-based deployments, but it is not the underlying technology of OpenShift Pipelines.

Tekton and Argo CD can work together, but Argo CD alone does not run CI pipelines.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration CI/CD Pipelines

Red Hat OpenShift Pipelines (Tekton)

Tekton Pipelines Documentation

Which of the following would contain MQSC commands for queue definitions to be executed when new MQ containers are deployed?

A. MQRegistry

B. CCDTJSON

C. OperatorImage

D. ConfigMap

In IBM Cloud Pak for Integration (CP4I) v2021.2, when deploying IBM MQ containers in OpenShift, queue definitions and other MQSC (MQ Script Command) commands need to be provided to configure the MQ environment dynamically. This is typically done using a Kubernetes ConfigMap, which allows administrators to define and inject configuration files, including MQSC scripts, into the containerized MQ instance at runtime.

Why is ConfigMap the Correct Answer?

A ConfigMap in OpenShift or Kubernetes is used to store configuration data as key-value pairs or files.

For IBM MQ, a ConfigMap can include an MQSC script that contains queue definitions, channel settings, and other MQ configuration.

When a new MQ container is deployed, the ConfigMap is mounted into the container, and the MQSC commands are executed to set up the queues.

Example Usage:

A sample ConfigMap containing MQSC commands for queue definitions may look like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-mq-config
data:
  10-create-queues.mqsc: |
    DEFINE QLOCAL('MY.QUEUE') REPLACE
    DEFINE QLOCAL('ANOTHER.QUEUE') REPLACE
```

This ConfigMap can then be referenced in the QueueManager resource (in its spec.queueManager.mqsc list) so that the queue definitions are applied automatically when the MQ container starts.
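The same ConfigMap can also be generated programmatically. This Python sketch builds the ConfigMap above plus a matching QueueManager fragment as JSON, which `oc apply -f` accepts. The helper names are hypothetical, and the `spec.queueManager.mqsc` layout is an assumption to check against the MQ Operator version in use.

```python
import json


def mqsc_configmap(name, key, queues):
    """Build a ConfigMap manifest whose `key` holds one DEFINE QLOCAL command per queue."""
    script = "".join(f"DEFINE QLOCAL('{q}') REPLACE\n" for q in queues)
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {key: script},
    }


def queue_manager_mqsc_ref(configmap_name, key):
    """Fragment of a QueueManager spec pointing the MQ Operator at the ConfigMap.

    Assumes the spec.queueManager.mqsc layout of the IBM MQ Operator's
    QueueManager resource; verify it for your operator version.
    """
    return {
        "spec": {
            "queueManager": {
                "mqsc": [{"configMap": {"name": configmap_name, "items": [key]}}]
            }
        }
    }


if __name__ == "__main__":
    cm = mqsc_configmap("my-mq-config", "10-create-queues.mqsc", ["MY.QUEUE", "ANOTHER.QUEUE"])
    print(json.dumps(cm, indent=2))
    print(json.dumps(queue_manager_mqsc_ref("my-mq-config", "10-create-queues.mqsc"), indent=2))
```

Generating the script keeps the queue list in one place; the `REPLACE` keyword makes each definition safe to re-run when the container restarts.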

Analysis of Other Options:

A. MQRegistry - Incorrect

MQRegistry is not a resource used to supply queue definitions or MQSC commands to MQ containers.

B. CCDTJSON - Incorrect

CCDTJSON refers to a Client Channel Definition Table (CCDT) in JSON format, which defines how MQ clients connect to queue managers rather than defining queues.

C. OperatorImage - Incorrect

The OperatorImage contains the IBM MQ Operator, which manages the lifecycle of MQ instances in OpenShift, but it does not store queue definitions or execute MQSC commands.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation: Configuring IBM MQ with ConfigMaps

IBM MQ Knowledge Center: Using MQSC commands in Kubernetes ConfigMaps

IBM Redbooks: IBM Cloud Pak for Integration Deployment Guide

Which two App Connect resources enable callable flows to be processed between an integration solution in a cluster and an integration server in an on-premises system?

A. Sync server

B. Connectivity agent

C. Kafka sync

D. Switch server

E. Routing agent

In IBM App Connect, which is part of IBM Cloud Pak for Integration (CP4I), callable flows enable integration between different environments, including on-premises systems and cloud-based integration solutions deployed in an OpenShift cluster.

To facilitate this connectivity, two resources are used:

1. Connectivity Agent (✅ Correct Answer)

The Connectivity Agent acts as a bridge between cloud-hosted App Connect instances and on-premises integration servers.

It enables secure bidirectional communication so that callable flows can be invoked between cloud-based and on-premises integration servers.

This is essential for hybrid cloud integrations, where some components remain on premises for security or compliance reasons.

2. Switch Server (✅ Correct Answer)

The App Connect Operator provides a SwitchServer resource for this purpose: a switch server enables callable flows to be processed between an integration solution in a cluster and an integration server in an on-premises system.

The agents of the integration servers on both sides connect to the switch server, which routes callable flow requests between them.

Why the Other Options Are Incorrect?

| Option | Explanation | Correct? |
|---|---|---|
| A. Sync server | There is no "Sync Server" component in IBM App Connect; callable flows are not routed through a sync server. | ❌ |
| C. Kafka sync | Kafka is used for event-driven messaging, but it is not required for callable flows between cloud and on-premises environments. | ❌ |
| E. Routing agent | Callable flow requests are routed by the switch server; a routing agent is not one of the App Connect resources used for callable flows. | ❌ |

Final Answer: ✅ B. Connectivity agent ✅ D. Switch server

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect - Callable Flows Documentation

IBM Cloud Pak for Integration - Hybrid Connectivity with Connectivity Agents

IBM App Connect Enterprise - On-Premise and Cloud Integration

An administrator has been given an OpenShift cluster to install Cloud Pak for Integration.

What can the administrator use to determine if the infrastructure prerequisites are met by the cluster?

A. Use the oc cluster status command to dump the node capacity and utilization statistics for all the nodes.

B. Use the oc get nodes -o wide command to obtain the capacity and utilization statistics of memory and compute of the nodes.

C. Use the oc describe nodes command to dump the node capacity and utilization statistics for all the nodes.

D. Use the oc adm top nodes command to obtain the capacity and utilization statistics of memory and compute of the nodes.

Before installing IBM Cloud Pak for Integration (CP4I) on an OpenShift Cluster, an administrator needs to verify that the cluster meets the infrastructure prerequisites such as CPU, memory, and overall resource availability.

The most effective way to check real-time resource utilization and capacity is by using the oc adm top nodes command.

Why is oc adm top nodes the correct answer?

This command provides a summary of CPU and memory usage for all nodes in the cluster.

It is part of the OpenShift administrator CLI (oc adm), which is designed for cluster-wide management.

The figures come from the cluster's resource metrics API, provided by the OpenShift monitoring stack, so they reflect current usage.

It helps administrators assess whether the cluster has sufficient resources to support a CP4I deployment.

Example usage:

```
oc adm top nodes
```

Example output:

```
NAME            CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
worker-node-1   1500m        30%    8Gi             40%
worker-node-2   1200m        25%    6Gi             30%
```
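To compare these figures with the CP4I sizing requirements, the output can be totaled across nodes. The following Python sketch assumes the five-column layout shown above and binary memory suffixes only (Ki, Mi, Gi, Ti); the function names are illustrative, and in practice the text would come from running `oc adm top nodes`.

```python
def parse_cpu(value):
    """Convert a CPU quantity such as '1500m' or '2' to millicores."""
    return int(value[:-1]) if value.endswith("m") else int(float(value) * 1000)


# Binary memory suffixes as they appear in `oc adm top nodes` output, in MiB.
MEMORY_UNITS_MI = {"Ki": 1 / 1024, "Mi": 1, "Gi": 1024, "Ti": 1024 * 1024}


def parse_memory_mi(value):
    """Convert a memory quantity such as '8Gi' or '512Mi' to MiB."""
    for suffix, factor in MEMORY_UNITS_MI.items():
        if value.endswith(suffix):
            return float(value[: -len(suffix)]) * factor
    raise ValueError(f"unsupported memory unit in {value!r}")


def total_usage(top_output):
    """Sum CPU (millicores) and memory (MiB) over all node rows of the output."""
    cpu_m, mem_mi = 0, 0.0
    for line in top_output.strip().splitlines()[1:]:  # skip the header row
        _name, cpu, _cpu_pct, mem, _mem_pct = line.split()
        cpu_m += parse_cpu(cpu)
        mem_mi += parse_memory_mi(mem)
    return cpu_m, mem_mi


if __name__ == "__main__":
    sample = """NAME            CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
worker-node-1   1500m        30%    8Gi             40%
worker-node-2   1200m        25%    6Gi             30%"""
    cpu_m, mem_mi = total_usage(sample)
    print(f"CPU in use: {cpu_m}m, memory in use: {mem_mi:.0f}Mi")
```

These totals are current usage; subtracting them from the nodes' allocatable resources (as listed, for example, by oc describe nodes) gives the headroom available for CP4I.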

Why the Other Options Are Incorrect?

| Option | Explanation | Correct? |
|---|---|---|
| A. Use the oc cluster status command to dump the node capacity and utilization statistics for all the nodes. | There is no oc cluster status command in the OpenShift 4 CLI. oc status summarizes the resources in the current project but does not report node resource utilization. | ❌ |
| B. Use the oc get nodes -o wide command to obtain the capacity and utilization statistics of memory and compute of the nodes. | oc get nodes -o wide shows only basic node details, such as status, roles, version, internal/external IPs, OS image, and container runtime. It does not display CPU or memory utilization. | ❌ |
| C. Use the oc describe nodes command to dump the node capacity and utilization statistics for all the nodes. | oc describe nodes shows each node's capacity, allocatable resources, and the CPU and memory requests and limits of its pods, but not actual real-time usage. | ❌ |

Final Answer: ✅ D. Use the oc adm top nodes command to obtain the capacity and utilization statistics of memory and compute of the nodes.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration - OpenShift Infrastructure Requirements

Red Hat OpenShift CLI Reference - oc adm top nodes

Red Hat OpenShift Documentation - Resource Monitoring

How many Cloud Pak for Integration licenses will the non-production environment cost compared to the production environment when deploying API Connect, App Connect Enterprise, and MQ?

A. The same amount.

B. Half as many.

C. More than half as many.

D. More information is needed to determine the cost.

IBM Cloud Pak for Integration (CP4I) licensing follows Virtual Processor Core (VPC)-based pricing, where licensing requirements differ between production and non-production environments.

For non-production environments, IBM typically requires half the number of VPC licenses compared to production environments when deploying components like API Connect, App Connect Enterprise, and IBM MQ.

This 50% reduction reflects IBM's non-production pricing: the same deployment consumes half as many license entitlements when it is used only for testing, development, or staging.

Why Answer B is Correct?

IBM provides reduced VPC license requirements for non-production environments to lower costs.

The licensing ratio is generally 1:2 (non-production:production): a non-production deployment needs half as many CP4I licenses as the same deployment in production.

This policy is commonly applied to major CP4I components, including:

IBM API Connect

IBM App Connect Enterprise

IBM MQ
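A worked example of the 2:1 rule, as a short Python sketch. The one-license-per-VPC conversion and the VPC counts are hypothetical placeholders; actual CP4I conversion ratios differ by component and come from IBM's license terms. Only the halving for non-production is taken from the text above.

```python
from fractions import Fraction

# Hypothetical: assume 1 CP4I license per VPC in production for every component.
# Real per-component conversion ratios come from IBM's license terms.
LICENSES_PER_VPC_PROD = Fraction(1)
# Non-production needs half the production entitlement (the 1:2 ratio above).
NON_PROD_FACTOR = Fraction(1, 2)


def cp4i_licenses(vpcs_by_component, production=True):
    """Total CP4I licenses for a deployment, given the VPCs used by each component."""
    per_vpc = LICENSES_PER_VPC_PROD * (1 if production else NON_PROD_FACTOR)
    return sum(vpcs * per_vpc for vpcs in vpcs_by_component.values())


if __name__ == "__main__":
    deployment = {"API Connect": 4, "App Connect Enterprise": 6, "MQ": 2}  # hypothetical sizes
    prod = cp4i_licenses(deployment, production=True)
    non_prod = cp4i_licenses(deployment, production=False)
    print(f"production: {prod}, non-production: {non_prod}")
```

`Fraction` keeps the arithmetic exact, so the halving holds for any VPC count, including odd ones.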

Explanation of Incorrect Answers:

A. The same amount → Incorrect

Non-production environments typically require half the licenses, not the same amount.

C. More than half as many → Incorrect

IBM's standard licensing policy offers a 50% reduction, so non-production needs half as many licenses, not more than half.

D. More information is needed to determine the cost. → Incorrect

While pricing details depend on contract terms, IBM has a standard non-production licensing ratio, so the relative number of licenses is predictable.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Licensing Guide

IBM Cloud Pak VPC Licensing

IBM MQ Licensing Details

IBM API Connect Licensing

IBM App Connect Enterprise Licensing

An administrator has configured OpenShift Container Platform (OCP) log forwarding to external third-party systems. What is the expected behavior when the external logging aggregator becomes unavailable and the buffer for collected logs has been completely filled?

A. OCP rotates the logs and deletes them.

B. OCP stores the logs in a temporary PVC.

C. OCP extends the buffer size and resumes log collection.

D. The Fluentd daemon is forced to stop.

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on OpenShift Container Platform (OCP), administrators can configure log forwarding to an external log aggregator (e.g., Elasticsearch, Splunk, or Loki).

OCP uses Fluentd as the log collector. When log forwarding fails because the external logging aggregator is unavailable, the following happens:

Fluentd continues to collect logs and stores them in its buffer (up to a defined limit). If the aggregator becomes available again, forwarding resumes, including the buffered logs.

If the buffer fills completely, Fluentd stops collecting new logs, and OCP follows its default log management policy:

The logs are rotated and deleted, so logs that were never collected are lost.

This prevents excessive storage consumption on the OpenShift cluster.

The Fluentd daemon keeps running, and the nodes keep managing storage by rotating and deleting logs instead of filling up the disk.

Why Answer A is Correct?

Log rotation is a default behavior in OCP when storage limits are reached.

If logs cannot be forwarded and the buffer is full, OCP rotates and deletes the logs, so operations continue at the cost of losing those logs.

This is a standard logging mechanism to prevent resource exhaustion.
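The buffering behavior can be illustrated with a toy model. This Python sketch is not Fluentd's implementation; the class `ToyForwarder` and its fixed `buffer_limit` are assumptions chosen to mirror the steps described above.

```python
from collections import deque


class ToyForwarder:
    """Toy model of a log collector with a fixed-size buffer (not Fluentd's real code).

    While the aggregator is down, records are buffered; once the buffer is full,
    new records are not collected and are counted as lost to log rotation.
    """

    def __init__(self, buffer_limit):
        self.buffer = deque()
        self.buffer_limit = buffer_limit  # fixed: the buffer never grows
        self.delivered = []
        self.lost = 0

    def collect(self, record, aggregator_up):
        if aggregator_up:
            self.flush()
            self.delivered.append(record)
        elif len(self.buffer) < self.buffer_limit:
            self.buffer.append(record)
        else:
            self.lost += 1  # collection stops; the rotated log is eventually deleted

    def flush(self):
        """Forward buffered records once the aggregator is reachable again."""
        while self.buffer:
            self.delivered.append(self.buffer.popleft())


if __name__ == "__main__":
    fwd = ToyForwarder(buffer_limit=3)
    for i in range(5):  # aggregator unavailable for five records
        fwd.collect(f"line-{i}", aggregator_up=False)
    fwd.collect("line-5", aggregator_up=True)  # aggregator back: buffer is flushed first
    print(f"delivered={fwd.delivered} lost={fwd.lost}")
```

The collector itself never stops and the buffer never expands; the only casualty is the records that arrived after the buffer was full.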

Explanation of Incorrect Answers:

B. OCP stores the logs in a temporary PVC. → Incorrect

OCP does not automatically store logs in a Persistent Volume Claim (PVC).

The logs stay in Fluentd's buffer, and a PVC cannot be added to the Fluentd pods to extend it.

C. OCP extends the buffer size and resumes log collection. → Incorrect

The buffer size is fixed and does not expand dynamically.

Instead of increasing the buffer, OCP rotates and deletes the logs once the limit is reached.

D. The Fluentd daemon is forced to stop. → Incorrect

The Fluentd daemon does not stop when the external log aggregator is down.

It continues collecting logs and buffering them until the limit is reached; after that it stops collecting new logs, but the daemon itself keeps running while log rotation takes over.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Logging and Monitoring

OpenShift Logging Overview

Fluentd Log Forwarding in OpenShift

OpenShift Log Rotation and Retention Policy

Assuming that IBM Common Services are installed in the ibm-common-services namespace and Cloud Pak for Integration is installed in the cp4i namespace, what is needed for authentication to the License Service APIs?

A. A token available in the ibm-licensing-token secret in the cp4i namespace.

B. A password available in platform-auth-idp-credentials in the ibm-common-services namespace.

C. A password available in the ibm-entitlement-key in the cp4i namespace.

D. A token available in the ibm-licensing-token secret in the ibm-common-services namespace.

IBM Cloud Pak for Integration (CP4I) relies on IBM Common Services for authentication, licensing, and other foundational functions. The License Service API is a key component that enables the monitoring and reporting of software license usage across the cluster.

Authentication to the License Service API

To authenticate to the IBM License Service APIs, a token is required. It is stored in the ibm-licensing-token secret in the ibm-common-services namespace, where IBM Common Services are installed.

Even though Cloud Pak for Integration is installed in the cp4i namespace, anyone querying the License Service API retrieves the authentication token from this secret in the ibm-common-services namespace.

Why is Option D Correct?

The ibm-licensing-token secret is automatically created in the ibm-common-services namespace when the IBM License Service is deployed.

This token is required for authentication when querying licensing information through the License Service API.

Because IBM Common Services are installed in ibm-common-services, and the License Service is part of these foundational services, the token is stored in that namespace rather than in the cp4i namespace.
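As a sketch of how a client would use the token, the Python below decodes the base64 value read from the secret and builds a request URL. It assumes the License Service API accepts the token as a `token` query parameter on endpoints such as `/products`; the route host and helper names are hypothetical.

```python
import base64
from urllib.parse import urlencode


def decode_secret_value(b64_value):
    """Kubernetes stores Secret data base64-encoded; decode one value to text."""
    return base64.b64decode(b64_value).decode("utf-8")


def license_service_url(base_url, endpoint, token):
    """Build a License Service API URL that passes the token as a query parameter."""
    query = urlencode({"token": token})
    return f"{base_url.rstrip('/')}/{endpoint}?{query}"


if __name__ == "__main__":
    # The encoded value would come from, for example:
    #   oc get secret ibm-licensing-token -n ibm-common-services -o jsonpath='{.data.token}'
    encoded = base64.b64encode(b"example-token").decode()  # stand-in for the real value
    token = decode_secret_value(encoded)
    # Hypothetical route host; look up the real License Service route in your cluster.
    print(license_service_url("https://ibm-licensing-service.apps.example.com", "products", token))
```

Note that the secret is read from ibm-common-services, not cp4i, which is exactly the distinction this question tests.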

Analysis of Other Options:

| Option | Correct/Incorrect | Reason |
|---|---|---|
| A. A token available in ibm-licensing-token secret in the cp4i namespace. | ❌ Incorrect | The licensing token is stored in the ibm-common-services namespace, not in cp4i. |
| B. A password available in platform-auth-idp-credentials in the ibm-common-services namespace. | ❌ Incorrect | This secret holds the administrator credentials for the Common Services identity provider and is not used for licensing authentication. |
| C. A password available in ibm-entitlement-key in the cp4i namespace. | ❌ Incorrect | The ibm-entitlement-key is used to pull images from the IBM Entitled Registry, not for licensing authentication. |
| D. A token available in ibm-licensing-token secret in the ibm-common-services namespace. | ✅ Correct | This secret contains the token required for authentication to the License Service API. |

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation: IBM License Service Authentication and Tokens

IBM Knowledge Center: Managing License Service in OpenShift

IBM Redbooks: IBM Cloud Pak for Integration Deployment Guide

Which of the following contains sensitive data to be injected when new IBM MQ containers are deployed?

A. Replicator

B. MQRegistry

C. DeploymentConfig

D. Secret

In IBM Cloud Pak for Integration (CP4I) v2021.2, when new IBM MQ (Message Queue) containers are deployed, sensitive data such as passwords, credentials, and encryption keys must be securely injected into the container environment.

The correct Kubernetes object for storing and injecting sensitive data is a Secret.

Why is "Secret" the correct answer?

Kubernetes Secrets are designed for sensitive data

Secrets allow IBM MQ containers to receive authentication credentials (e.g., admin passwords, TLS certificates, and API keys) without placing them in ConfigMaps or deployment files.

Unlike ConfigMaps, Secrets are intended for sensitive data: access to them is normally restricted more tightly, and they can be encrypted at rest in etcd when encryption is enabled. By default, however, their values are only base64-encoded, not encrypted.

Used by IBM MQ Operator

When deploying IBM MQ in OpenShift/Kubernetes, the MQ Operator references Secrets to inject the necessary credentials into MQ containers.

Example:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mq-secret
type: Opaque
data:
  mq-password: bXlwYXNzd29yZA==
```

The MQ container can then read this mq-password value without it appearing in the deployment definition.

Prevents hardcoding sensitive data

Instead of storing passwords directly in deployment files, using Secrets enhances security and compliance with enterprise security standards.
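Because Secret `data` values must be base64-encoded, it helps to see the encoding done explicitly. This Python sketch produces the manifest shown above; the helper names are hypothetical. Base64 is reversible, so the encoding step adds no secrecy of its own.

```python
import base64


def secret_data_value(plaintext):
    """Encode a value the way Kubernetes expects it in a Secret's `data` field.

    Base64 is an encoding, not encryption: anyone who can read the Secret can decode it.
    """
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def mq_secret_manifest(name, values):
    """Build an Opaque Secret manifest from plaintext key/value pairs."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: secret_data_value(val) for key, val in values.items()},
    }


if __name__ == "__main__":
    print(mq_secret_manifest("mq-secret", {"mq-password": "mypassword"}))
```

In practice, `oc create secret generic mq-secret --from-literal=mq-password=<password>` performs the same encoding, and a Secret's `stringData` field accepts plain text that Kubernetes encodes on write.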

Why are the other options incorrect?

❌ A. Replicator

A replicator is not a resource for storing credentials or injecting them into MQ containers.

❌ B. MQRegistry

MQRegistry is not used to manage the injection of sensitive data into MQ containers.

❌ C. DeploymentConfig

A DeploymentConfig in OpenShift defines how pods should be deployed but does not handle sensitive data injection.

A DeploymentConfig can reference a Secret, but it does not store sensitive information itself.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ Security - Kubernetes Secrets

IBM Docs – Securely Managing MQ in Kubernetes

IBM Cloud Pak for Integration Knowledge Center

Covers how Secrets are used in MQ container deployments.

Red Hat OpenShift Documentation – Kubernetes Secrets

Secrets in Kubernetes

If the App Connect Operator is installed in a restricted network, which statement is true?

A. Simple Storage Service (S3) cannot be used for BAR files.

B. Ephemeral storage cannot be used for BAR files.

C. Only ephemeral storage is supported for BAR files.

D. Persistent Claim storage cannot be used for BAR files.

In IBM Cloud Pak for Integration (CP4I) v2021.2, when the App Connect Operator is deployed in a restricted network (air-gapped environment), external cloud-based services such as AWS S3 are typically not reachable.

Why Option A (S3 Cannot Be Used) is Correct:

A restricted network has no direct internet access, so external storage services like Amazon S3 cannot be used to store Broker Archive (BAR) files.

BAR files contain packaged integration flows for IBM App Connect Enterprise (ACE).

In restricted environments, administrators must use storage options inside the cluster, such as:

Persistent Volume Claims (PVCs)

Ephemeral storage (temporary storage that is lost when the pod is deleted or rescheduled)
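A minimal sketch of this rule as a pre-flight check follows. It assumes the App Connect Dashboard selects BAR file storage through a `spec.storage.type` field with the values `ephemeral`, `persistent-claim`, and `s3`, with `class` and `size` for persistent claims; treat those field names, and the helper functions, as assumptions to verify against the operator documentation.

```python
# Storage types assumed to be accepted by the Dashboard's spec.storage.type field.
ALL_BAR_STORAGE = {"ephemeral", "persistent-claim", "s3"}


def allowed_bar_storage(restricted_network):
    """Return the BAR file storage types usable in the given network setup."""
    # S3 storage is not available when the operator runs in a restricted network.
    return ALL_BAR_STORAGE - {"s3"} if restricted_network else set(ALL_BAR_STORAGE)


def dashboard_storage_spec(storage_type, restricted_network, storage_class=None, size="5Gi"):
    """Build a hypothetical Dashboard storage fragment, rejecting unsupported types."""
    if storage_type not in allowed_bar_storage(restricted_network):
        raise ValueError(f"{storage_type!r} cannot be used for BAR files here")
    spec = {"type": storage_type}
    if storage_type == "persistent-claim":
        spec.update({"class": storage_class, "size": size})
    return {"spec": {"storage": spec}}


if __name__ == "__main__":
    # Hypothetical storage class name; use one that exists in your cluster.
    print(dashboard_storage_spec("persistent-claim", True, storage_class="my-rwx-class"))
```

Rejecting `s3` up front surfaces the restriction before a Dashboard instance is created, rather than as a runtime failure.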

Explanation of Incorrect Answers:

B. Ephemeral storage cannot be used for BAR files. → Incorrect

Ephemeral storage is supported but is not recommended for production because the data is lost when the pod is deleted or rescheduled.

C. Only Ephemeral storage is supported for BAR files. → Incorrect

Both ephemeral storage and Persistent Volume Claims (PVCs) are supported for storing BAR files.

Ephemeral storage is not the only option.

D. Persistent Claim storage cannot be used for BAR files. → Incorrect

Persistent Volume Claims (PVCs) are a supported and recommended method for storing BAR files in a restricted network.

This ensures that the BAR files persist even if a pod is restarted or redeployed.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect Enterprise - BAR File Storage Options

IBM Cloud Pak for Integration Storage Considerations

IBM Cloud Pak for Integration Deployment in Restricted Environments

Select all that apply

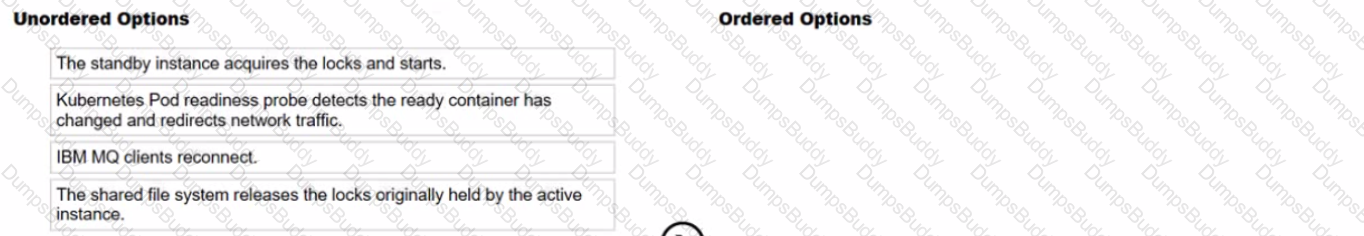

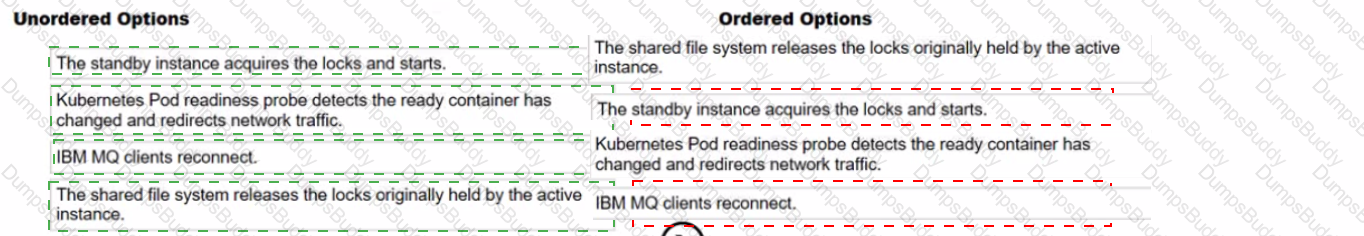

What is the correct sequence of steps of how a multi-instance queue manager failover occurs?

1️⃣ The shared file system releases the locks originally held by the active instance.

When the active IBM MQ instance fails, the shared storage system releases the file locks, allowing the standby instance to take over.

2️⃣ The standby instance acquires the locks and starts.

The standby queue manager detects the failure, acquires the released locks, and promotes itself to the active instance.

3️⃣ Kubernetes Pod readiness probe detects the ready container has changed and redirects network traffic.

OpenShift/Kubernetes detects that the active instance has changed and updates its internal routing to redirect connections to the new active queue manager.

4️⃣ IBM MQ clients reconnect.

MQ clients reconnect to the new active queue manager using automatic client reconnection, for example the MQCNO_RECONNECT connect option or the DEFRECON client channel attribute.

Comprehensive Detailed Explanation:

In IBM Cloud Pak for Integration (CP4I) v2021.2, multi-instance queue managers use a shared file system to enable failover. The failover process follows these key steps:

Release of Locks:

The active queue manager holds a lock on shared storage.

When it fails, the lock is released, allowing the standby instance to take over.

Standby Becomes Active:

The standby queue manager acquires the released locks and promotes itself to active.

Network Traffic Redirection:

Kubernetes/OpenShift detects the pod status change using readiness probes and redirects network traffic to the new active instance.

Client Reconnection:

IBM MQ clients reconnect to the new active instance, ensuring minimal downtime.
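The four steps can be replayed in a toy Python model. `SharedFileSystem`, `fail_over`, and the pod names are hypothetical; real lock handling is done by the shared file system and IBM MQ, and traffic switching by Kubernetes, not by application code.

```python
class SharedFileSystem:
    """Toy stand-in for the shared storage: one exclusive lock, released if its holder fails."""

    def __init__(self):
        self.lock_holder = None

    def try_lock(self, instance):
        """Grant the lock if it is free; return True if `instance` now holds it."""
        if self.lock_holder is None:
            self.lock_holder = instance
        return self.lock_holder == instance

    def holder_failed(self, instance):
        """Release the locks held by a failed instance."""
        if self.lock_holder == instance:
            self.lock_holder = None


def fail_over(fs, active, standby, service):
    """Replay the failover steps; `service['ready']` is the pod receiving traffic."""
    fs.holder_failed(active)              # 1. the file system releases the locks
    if not fs.try_lock(standby):          # 2. the standby acquires the locks and starts
        raise RuntimeError("standby could not acquire the queue manager locks")
    service["ready"] = standby            # 3. readiness probe: traffic moves to the new pod
    return f"clients reconnect to {service['ready']}"  # 4. MQ clients reconnect


if __name__ == "__main__":
    fs = SharedFileSystem()
    fs.try_lock("qm-pod-0")               # the active instance holds the locks
    print(fs.try_lock("qm-pod-1"))        # the standby waits: False
    print(fail_over(fs, "qm-pod-0", "qm-pod-1", {"ready": "qm-pod-0"}))
```

The ordering matters: the standby cannot start until the locks are released, and traffic cannot move until the standby's readiness probe succeeds.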

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ Multi-Instance Queue Manager Failover

Kubernetes Readiness and Liveness Probes

IBM MQ High Availability Using Shared Storage

An administrator is checking that all components and software in their estate are licensed. They have only purchased Cloud Pak for Integration (CP4I) licenses.

How are the OpenShift master nodes licensed?

A. CP4I licenses include entitlement for the entire OpenShift cluster that they run on, and the administrator can count against the master nodes.

B. OpenShift master nodes do not consume OpenShift license entitlement, so no license is needed.

C. The administrator will need to purchase additional OpenShift licenses to cover the master nodes.

D. CP4I licenses include entitlement for 3 cores of OpenShift per core of CP4I.

In IBM Cloud Pak for Integration (CP4I) v2021.2, licensing is based on Virtual Processor Cores (VPCs), and it includes entitlement for OpenShift usage. However, OpenShift master nodes (control plane nodes) do not consume license entitlement, because:

OpenShift licensing only applies to worker nodes.

The master nodes (control plane nodes) manage cluster operations and scheduling, but they do not run user workloads.

IBM’s Cloud Pak licensing model considers only the worker nodes for licensing purposes.

Master nodes are essential infrastructure and are excluded from entitlement calculations.

IBM and Red Hat do not charge for OpenShift master nodes in Cloud Pak deployments.
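As a worked illustration of the counting rule stated above (worker nodes count, master nodes do not), the following sketch sums virtual processor cores on worker nodes only. It is a simplified model under stated assumptions: the node list, the `"master"`/`"worker"` role labels, and the idea that every worker core needs entitlement are hypothetical; in a real deployment IBM License Service measures the VPCs actually used by Cloud Pak containers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    role: str   # hypothetical labels: "master" (control plane) or "worker"
    vpcs: int   # virtual processor cores on the node


def entitlement_vpcs(nodes):
    """Count VPCs that need entitlement under the rule above: worker nodes only."""
    return sum(node.vpcs for node in nodes if node.role == "worker")


cluster = [
    Node("master-0", "master", 8),
    Node("master-1", "master", 8),
    Node("master-2", "master", 8),
    Node("worker-0", "worker", 16),
    Node("worker-1", "worker", 16),
    Node("worker-2", "worker", 16),
]

print(entitlement_vpcs(cluster))  # 48: the 24 master VPCs are not counted
```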

Explanation of Incorrect Answers:

A. CP4I licenses include entitlement for the entire OpenShift cluster that they run on, and the administrator can count against the master nodes. → ❌ Incorrect

CP4I licenses do cover OpenShift, but only for worker nodes where workloads are deployed.

Master nodes are excluded from licensing calculations.

C. The administrator will need to purchase additional OpenShift licenses to cover the master nodes. → ❌ Incorrect

No additional OpenShift licenses are required for master nodes.

OpenShift licensing only applies to worker nodes that run applications.

D. CP4I licenses include entitlement for 3 cores of OpenShift per core of CP4I. → ❌ Incorrect

The standard IBM Cloud Pak licensing model provides 1 VPC of OpenShift for 1 VPC of CP4I, not a 3:1 ratio.

Additionally, this applies only to worker nodes, not master nodes.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak Licensing Guide

IBM Cloud Pak for Integration Licensing Details

Red Hat OpenShift Licensing Guide

Which two of the following support Cloud Pak for Integration deployments?

IBM Cloud Code Engine

Amazon Web Services

Microsoft Azure

IBM Cloud Foundry

Docker

IBM Cloud Pak for Integration (CP4I) v2021.2 is designed to run on containerized environments that support Red Hat OpenShift, which can be deployed on various public clouds and on-premises environments. The two correct options that support CP4I deployments are:

Amazon Web Services (AWS) (Option B) ✅

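AWS supports IBM Cloud Pak for Integration through Red Hat OpenShift Service on AWS (ROSA) or through self-managed OpenShift clusters running on Amazon EC2 instances.

CP4I components such as API Connect, App Connect, MQ, and Event Streams can be deployed on OpenShift running on AWS.

Microsoft Azure (Option C) ✅

Azure supports IBM Cloud Pak for Integration through Azure Red Hat OpenShift (ARO) or through self-managed OpenShift clusters running on Azure virtual machines.

IBM Cloud Code Engine, IBM Cloud Foundry, and plain Docker do not provide the Red Hat OpenShift platform that CP4I requires, so they are not valid answers.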

Which two Red Hat OpenShift Operators should be installed to enable OpenShift Logging?

OpenShift Console Operator

OpenShift Logging Operator

OpenShift Log Collector

OpenShift Centralized Logging Operator

OpenShift Elasticsearch Operator

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on Red Hat OpenShift, logging is a critical component for monitoring cluster and application activities. To enable OpenShift Logging, two key operators must be installed:

OpenShift Logging Operator (B)

This operator is responsible for managing the logging stack in OpenShift.

It helps configure and deploy logging components like Fluentd, Kibana, and Elasticsearch within the OpenShift cluster.

It provides a unified way to collect and visualize logs across different workloads.

OpenShift Elasticsearch Operator (E)

This operator manages the Elasticsearch cluster, which is the central data store for log aggregation in OpenShift.

Elasticsearch stores logs collected from cluster nodes and applications, making them searchable and analyzable via Kibana.

Without this operator, OpenShift Logging cannot function, as it depends on Elasticsearch for log storage.
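Once both operators are installed, the logging stack is typically instantiated with a `ClusterLogging` custom resource. The sketch below builds such a resource as a Python dictionary and prints it as JSON, which `oc apply -f` also accepts. The node count, the `gp2` storage class, and the size are illustrative assumptions, and the schema shown is the `logging.openshift.io/v1` one used by Elasticsearch/Fluentd-based OpenShift Logging releases; check it against the release you actually install.

```python
import json


def cluster_logging_cr(es_nodes=3, storage_class="gp2", storage_size="200G"):
    """Build a ClusterLogging resource: Elasticsearch store, Fluentd collector, Kibana UI."""
    return {
        "apiVersion": "logging.openshift.io/v1",
        "kind": "ClusterLogging",
        "metadata": {
            "name": "instance",               # the Logging Operator expects this name
            "namespace": "openshift-logging",
        },
        "spec": {
            "managementState": "Managed",
            # The log store is run by the OpenShift Elasticsearch Operator.
            "logStore": {
                "type": "elasticsearch",
                "elasticsearch": {
                    "nodeCount": es_nodes,
                    "redundancyPolicy": "SingleRedundancy",
                    # storage_class is an assumption: use a class that exists in your cluster
                    "storage": {"storageClassName": storage_class, "size": storage_size},
                },
            },
            "visualization": {"type": "kibana", "kibana": {"replicas": 1}},
            "collection": {"logs": {"type": "fluentd", "fluentd": {}}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(cluster_logging_cr(), indent=2))
```

Saved as a script, its output can be piped to `oc apply -f -`. Keeping the resource in code makes it easy to vary the Elasticsearch node count per environment.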

Explanation of Incorrect Answers:

A. OpenShift Console Operator → Incorrect

The OpenShift Console Operator manages the web UI of OpenShift but has no role in logging.

It does not collect, store, or manage logs.

C. OpenShift Log Collector → Incorrect

There is no official OpenShift component or operator named "OpenShift Log Collector."

Log collection is handled by Fluentd, which is managed by the OpenShift Logging Operator.

D. OpenShift Centralized Logging Operator → Incorrect

This is not a valid OpenShift operator.

The correct operator for centralized logging is the OpenShift Logging Operator.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

OpenShift Logging Overview

OpenShift Logging Operator Documentation

OpenShift Elasticsearch Operator Documentation

IBM Cloud Pak for Integration Logging Configuration

When using the Platform Navigator, what permission is required to add users and user groups?

root

Super-user

Administrator

User

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Platform Navigator is the central UI for managing integration capabilities, including user and access control. To add users and user groups, the required permission level is Administrator.

Why is "Administrator" the Correct Answer?

User Management Capabilities:

The Administrator role in Platform Navigator has full access to user and group management functions, including:

Adding new users

Assigning roles

Managing access policies

RBAC (Role-Based Access Control) Enforcement:

CP4I enforces RBAC to restrict actions based on roles.

Only Administrators can modify user access, ensuring security compliance.

Access Control via OpenShift and IAM Integration:

User management in CP4I integrates with the Identity and Access Management (IAM) service of IBM Cloud Pak foundational services and with OpenShift user management.

The Administrator role ensures correct permissions for authentication and authorization.
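The RBAC rule described here can be sketched as a role-to-permission lookup with deny-by-default. The role names match the options in this question, but the permission names and the mapping are hypothetical and only illustrate the principle; they are not the Platform Navigator's actual internal model.

```python
# Hypothetical permission sets, for illustration only.
ROLE_PERMISSIONS = {
    "Administrator": {"view", "create_instance", "add_user", "add_group", "assign_role"},
    "User": {"view"},
}


class PermissionDenied(Exception):
    pass


def require(role, permission):
    """Raise PermissionDenied unless the role grants the permission (unknown roles get nothing)."""
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionDenied(f"role {role!r} lacks permission {permission!r}")


def add_user(acting_role, username, directory):
    """Add a user to a directory (a plain set here) after the RBAC check passes."""
    require(acting_role, "add_user")
    directory.add(username)
    return directory
```

Roles that are not defined at all, such as `root` or `Super-user`, fall through to an empty permission set, so every action is refused.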

Why Not the Other Options?

A. root → ❌ Incorrect

"root" is a Linux system user, not a role in Platform Navigator. CP4I does not grant UI-based root access.

B. Super-user → ❌ Incorrect

No predefined "Super-user" role exists in CP4I. Even if the term refers to an elevated user, it does not match the Administrator role in Platform Navigator.

D. User → ❌ Incorrect

Regular "User" roles have view-only or limited permissions and cannot manage users or groups.

Thus, the Administrator role is the correct choice for adding users and user groups in Platform Navigator.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration - Platform Navigator Overview

Managing Users in Platform Navigator

Role-Based Access Control in CP4I

OpenShift User Management and Authentication

What is the effect of creating a second medium size profile?

The first profile will be replaced by the second profile.

The second profile will be configured with a medium size.

The first profile will be re-configured with a medium size.

The second profile will be configured with a large size.

In IBM Cloud Pak for Integration (CP4I) v2021.2, profiles define the resource allocation and configuration settings for deployed services. When creating a second medium-size profile, the system will allocate the resources according to the medium-size specifications, without affecting the first profile.

Why Option B is Correct:

IBM Cloud Pak for Integration supports multiple profiles, each with its own resource allocation.

When a second medium-size profile is created, it is independently assigned the medium-size configuration without modifying the existing profiles.

This allows multiple services to run with similar resource constraints while remaining separately managed.

Explanation of Incorrect Answers:

A. The first profile will be replaced by the second profile. → ❌ Incorrect

Creating a new profile does not replace an existing profile; each profile is independent.

C. The first profile will be re-configured with a medium size. → ❌ Incorrect

The first profile remains unchanged. A second profile does not modify or reconfigure an existing one.

D. The second profile will be configured with a large size. → ❌ Incorrect

The second profile will retain the specified medium size and will not be automatically upgraded to a large size.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Sizing and Profiles

Managing Profiles in IBM Cloud Pak for Integration

OpenShift Resource Allocation for CP4I

Which two authentication types support single sign-on?

2FA

Enterprise LDAP

Plain text over HTTPS

Enterprise SSH

OpenShift authentication

Single Sign-On (SSO) is an authentication mechanism that allows users to log in once and gain access to multiple applications without re-entering credentials. In IBM Cloud Pak for Integration (CP4I), Enterprise LDAP and OpenShift authentication both support SSO.

Enterprise LDAP (B) – ✅ Supports SSO

Lightweight Directory Access Protocol (LDAP) is commonly used in enterprises for centralized authentication.

CP4I can integrate with Enterprise LDAP, allowing users to authenticate once and access multiple cloud services without needing separate logins.

OpenShift Authentication (E) – ✅ Supports SSO

OpenShift provides OAuth-based authentication, enabling SSO across multiple OpenShift-integrated services.

CP4I uses OpenShift’s built-in identity provider to allow seamless user authentication across different Cloud Pak components.

Analysis of the Incorrect Options:

A. 2FA (Incorrect):

Two-Factor Authentication (2FA) enhances security by requiring an additional verification step but does not inherently support SSO.

C. Plain Text over HTTPS (Incorrect):

Sending credentials in plain text over HTTPS is a per-application login: HTTPS protects the credentials in transit, but no shared session or token is issued that other applications can trust, so it does not provide SSO.

D. Enterprise SSH (Incorrect):

SSH authentication is used for remote access to servers and is not related to SSO.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Authentication & SSO Guide

Red Hat OpenShift Authentication and Identity Providers

IBM Cloud Pak - Integrating with Enterprise LDAP

Which two OpenShift project names can be used for installing the Cloud Pak for Integration operator?

openshift-infra

openshift

default

cp4i

openshift-cp4i

When installing the Cloud Pak for Integration (CP4I) operator on OpenShift, administrators must select an appropriate OpenShift project (namespace).

IBM recommends using dedicated namespaces for CP4I installation to ensure proper isolation and resource management. The two commonly used namespaces are:

cp4i → A custom namespace that administrators often create specifically for CP4I components.

openshift-cp4i → A namespace prefixed with openshift-, often used in managed environments or to align with OpenShift conventions.

Both of these namespaces are valid for CP4I installation.
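Before creating the project, a pre-flight check can catch names that are syntactically invalid or on the reserved list discussed in this question. The sketch below applies the Kubernetes DNS-1123 label rule for namespace names (lowercase alphanumerics and `-`, at most 63 characters, starting and ending with an alphanumeric) plus a hypothetical deny-list built from the rejected options and the standard `kube-` system namespaces. It does not query a cluster, and the deny-list is an assumption you should adapt.

```python
import re

# RFC 1123 label, which Kubernetes requires for namespace names.
DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")

# Hypothetical deny-list: options rejected above plus Kubernetes system namespaces.
RESERVED = {"default", "openshift", "openshift-infra",
            "kube-system", "kube-public", "kube-node-lease"}


def check_cp4i_namespace(name):
    """Return a list of problems with a proposed namespace; an empty list means it passes."""
    problems = []
    if len(name) > 63:
        problems.append("longer than 63 characters")
    if not DNS1123_LABEL.fullmatch(name):
        problems.append("not a valid DNS-1123 label")
    if name in RESERVED:
        problems.append("reserved for cluster or platform use")
    return problems
```

`fullmatch` is used instead of `^...$` so that a trailing newline cannot slip through the pattern.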

Explanation of Incorrect Answers:

A. openshift-infra → ❌ Incorrect

The openshift-infra namespace is reserved for internal OpenShift infrastructure components.

It is not intended for application or operator installations.

B. openshift → ❌ Incorrect

The openshift namespace is a protected namespace used by OpenShift’s core services.

Installing CP4I in this namespace can cause conflicts and is not recommended.

C. default → ❌ Incorrect

The default namespace is a generic OpenShift project that lacks the necessary role-based access control (RBAC) configurations for CP4I.

Using this namespace can lead to security and permission issues.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Installation Guide

OpenShift Namespace Best Practices

IBM Cloud Pak for Integration Operator Deployment

https://www.ibm.com/docs/en/cloud-paks/cp-integration/2021.2?topic=installing-operators

Which statement is true about the Authentication URL user registry in API Connect?

It authenticates Developer Portal sites.

It authenticates users defined in a provider organization.

It authenticates Cloud Manager users.

It authenticates users by referencing a custom identity provider.

In IBM API Connect, an Authentication URL user registry is a type of user registry that delegates user verification to an external, custom authentication service. It is typically used when API Connect needs to integrate with an in-house or otherwise custom authentication mechanism that the built-in registry types (such as LDAP or OIDC) do not cover.

When configured, API Connect does not store user credentials locally. Instead, it sends the user's credentials to the specified authentication URL and treats a successful HTTP response (200 OK) as proof that the user is authenticated.

Why Answer D is Correct:

The Authentication URL user registry is specifically designed to reference an external custom identity provider.

This lets API Connect delegate authentication to any service that exposes such an endpoint, including one that in turn checks LDAP or Active Directory.

It is commonly used for single sign-on (SSO) and enterprise authentication strategies.
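To make the delegation concrete, here is a minimal sketch of the kind of endpoint an Authentication URL registry can point at. It assumes API Connect sends the user's credentials in an HTTP Basic `Authorization` header and expects 200 for a valid user and 401 otherwise; verify that contract against the API Connect documentation for your version. The in-memory `USERS` table, the JSON body, and the helper names are hypothetical, and a real service would check an enterprise identity store and run over TLS.

```python
import base64
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical user store; a real service would query LDAP, a database, etc.
USERS = {"alice": "s3cret"}


def authenticate(auth_header):
    """Return the username if the Basic Authorization header is valid, else None."""
    if not auth_header or not auth_header.startswith("Basic "):
        return None
    try:
        decoded = base64.b64decode(auth_header[len("Basic "):], validate=True).decode("utf-8")
    except ValueError:  # binascii.Error and UnicodeDecodeError both subclass ValueError
        return None
    username, sep, password = decoded.partition(":")
    if sep and USERS.get(username) == password:
        return username
    return None


class AuthURLHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        user = authenticate(self.headers.get("Authorization"))
        if user is None:
            self.send_response(401)  # rejected: API Connect treats the login as failed
            self.end_headers()
            return
        body = json.dumps({"username": user}).encode("utf-8")
        self.send_response(200)      # accepted: the user is authenticated
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet


def make_server(port=8080, host="127.0.0.1"):
    """Create (but do not start) the HTTP server."""
    return HTTPServer((host, port), AuthURLHandler)
```

Calling `make_server().serve_forever()` starts the endpoint locally; the registry's URL would then point at wherever the service is exposed.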

Explanation of Incorrect Answers:

A. It authenticates Developer Portal sites. → Incorrect

The Developer Portal uses its own authentication mechanisms, such as LDAP, local user registries, and external identity providers, but the Authentication URL user registry does not authenticate Developer Portal users directly.

B. It authenticates users defined in a provider organization. → Incorrect

Users in a provider organization (such as API providers and administrators) are typically authenticated using Cloud Manager or an LDAP-based user registry, not via an Authentication URL user registry.

C. It authenticates Cloud Manager users. → Incorrect

Cloud Manager users are typically authenticated via LDAP or API Connect’s built-in user registry.

The Authentication URL user registry is not responsible for Cloud Manager authentication.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM API Connect User Registry Types

IBM API Connect Authentication and User Management

IBM Cloud Pak for Integration Documentation

https://www.ibm.com/docs/SSMNED_v10/com.ibm.apic.cmc.doc/capic_cmc_registries_concepts.html

Which Kubernetes resource can be queried to determine if the API Connect operator installation has a status of 'Succeeded'?

The API Connect InstallPlan.

The API Connect ClusterServiceVersion.

The API Connect Operator subscription.

The API Connect Operator Pod.

In IBM Cloud Pak for Integration (CP4I) v2021.2, when installing the API Connect Operator, it is crucial to monitor its deployment status to ensure a successful installation. This is typically done using ClusterServiceVersion (CSV), which is a Kubernetes resource managed by the Operator Lifecycle Manager (OLM).

The ClusterServiceVersion (CSV) represents the state of an operator and provides details about its installation, upgrades, and available APIs. The status field within the CSV object contains the installation progress and indicates whether the installation was successful (Succeeded), is still in progress (Installing), or has failed (Failed).

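To query the status of the API Connect operator installation, run the following command, replacing <namespace> with the namespace that the operator was installed into:

kubectl get csv -n <namespace>

or, for the full details of a single CSV:

kubectl describe csv <csv-name> -n <namespace>

These commands return details about the CSV, including its phase (the PHASE column of kubectl get csv, or the status.phase field of the resource), which should be "Succeeded" once the installation is complete.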

Why Other Options Are Incorrect:

A. The API Connect InstallPlan – While the InstallPlan is responsible for tracking the installation process of the operator, it does not explicitly indicate whether the installation was completed successfully.

C. The API Connect Operator Subscription – The Subscription resource ensures that the operator is installed and updated, but it does not provide a direct success or failure status of the installation.

D. The API Connect Operator Pod – Checking the Pod status only shows if the Operator is running but does not confirm whether the installation process itself was completed successfully.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Knowledge Center

IBM API Connect Documentation

IBM OLM ClusterServiceVersion Reference

Kubernetes Official Documentation on CSV

Which capability describes and catalogs the APIs of Kafka event sources and socializes those APIs with application developers?

Gateway Endpoint Management

REST Endpoint Management

Event Endpoint Management

API Endpoint Management

In IBM Cloud Pak for Integration (CP4I) v2021.2, Event Endpoint Management (EEM) is the capability that describes, catalogs, and socializes APIs for Kafka event sources with application developers.

Why "Event Endpoint Management" is the Correct Answer?

Event Endpoint Management (EEM) allows developers to discover and consume Kafka event sources in a structured way, similar to how REST APIs are managed in an API Gateway.

It provides a developer portal where event-driven APIs can be exposed, documented, and consumed by applications.

It helps organizations share event-driven APIs with internal teams or external consumers, enabling seamless event-driven integrations.
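Event Endpoint Management catalogs Kafka topics using AsyncAPI documents, much as OpenAPI documents describe REST APIs. The sketch below builds a minimal AsyncAPI 2.0 description of one Kafka topic as a Python dictionary. The topic name, bootstrap address, and payload schema are hypothetical, and the document that EEM generates or expects may contain additional fields.

```python
import json


def kafka_topic_asyncapi(topic, bootstrap, title, version="1.0.0"):
    """Build a minimal AsyncAPI 2.0 document describing one Kafka topic."""
    return {
        "asyncapi": "2.0.0",
        "info": {"title": title, "version": version},
        "servers": {
            "cluster": {"url": bootstrap, "protocol": "kafka"},
        },
        "channels": {
            topic: {
                # In AsyncAPI 2.x, 'subscribe' describes messages that clients
                # can subscribe to, i.e. events the source publishes to this topic.
                "subscribe": {
                    "message": {
                        "contentType": "application/json",
                        "payload": {
                            "type": "object",
                            "properties": {
                                "orderId": {"type": "string"},
                                "amount": {"type": "number"},
                            },
                        },
                    }
                }
            }
        },
    }


if __name__ == "__main__":
    doc = kafka_topic_asyncapi("orders.new", "kafka.example.com:9092", "New orders")
    print(json.dumps(doc, indent=2))
```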

Why the Other Options Are Incorrect?

A. Gateway Endpoint Management → ❌ Incorrect

Gateway endpoint management refers to managing API Gateway endpoints for routing and securing APIs, but it does not focus on event-driven APIs like Kafka.

B. REST Endpoint Management → ❌ Incorrect

REST Endpoint Management deals with traditional RESTful APIs, not event-driven APIs for Kafka.

D. API Endpoint Management → ❌ Incorrect

API Endpoint Management is a generic term for managing APIs and does not specifically focus on event-driven APIs for Kafka.

Final Answer: ✅ C. Event Endpoint Management

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration – Event Endpoint Management

IBM Event Endpoint Management Documentation

Kafka API Discovery & Management in IBM CP4I

Which statement is true about the removal of individual subsystems of API Connect on OpenShift or Cloud Pak for Integration?

They can be deleted regardless of the deployment methods.

They can be deleted if API Connect was deployed using a single top level CR.

They cannot be deleted if API Connect was deployed using a single top level CR.

They cannot be deleted if API Connect was deployed using a single top level CRM.

In IBM Cloud Pak for Integration (CP4I) v2021.2, when deploying API Connect on OpenShift or within the Cloud Pak for Integration framework, there are different deployment methods:

Single Top-Level Custom Resource (CR) – This method deploys all API Connect subsystems as a single unit, meaning they are managed together. Removing individual subsystems is not supported when using this deployment method. If you need to remove a subsystem, you must delete the entire API Connect instance.

Multiple Independent Custom Resources (CRs) – This method allows more granular control, enabling the deletion of individual subsystems without affecting the entire deployment.

Since the question specifically asks about API Connect deployed using a single top-level CR, it is not possible to delete individual subsystems. The entire deployment must be deleted and reconfigured if changes are required.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM API Connect v10 Documentation: IBM Docs - API Connect on OpenShift

IBM Cloud Pak for Integration Knowledge Center: IBM CP4I Documentation

API Connect Deployment Guide: Managing API Connect Subsystems

What is a prerequisite for setting a custom certificate when replacing the default ingress certificate?

The new certificate private key must be unencrypted.

The certificate file must have only a single certificate.

The new certificate private key must be encrypted.

The new certificate must be a self-signed certificate.

When replacing the default ingress certificate in IBM Cloud Pak for Integration (CP4I) v2021.2, one critical requirement is that the private key associated with the new certificate must be unencrypted.

Why Option A (Unencrypted Private Key) is Correct:

OpenShift’s Ingress Controller (which CP4I uses) requires an unencrypted private key to load and use the custom TLS certificate.

The ingress controller has no way to supply a passphrase when it starts, so it cannot use an encrypted key, and decrypting the key manually on every restart would defeat automation.

The custom certificate and its key are stored in a Kubernetes secret, where access is restricted by RBAC and encryption at rest can be enabled for etcd, so encrypting the key file itself is unnecessary.

To apply a new custom certificate for ingress, the process typically involves:

Creating a Kubernetes secret containing the unencrypted private key and the certificate:

oc create secret tls custom-ingress-cert \
  --cert=custom.crt \
  --key=custom.key \
  -n openshift-ingress

Updating the OpenShift Ingress Controller configuration to use the new secret.
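A quick pre-flight check can confirm the prerequisite before the secret is created. This sketch inspects PEM text only (no cryptography library): it flags a private key as encrypted if it uses the PKCS#8 `ENCRYPTED PRIVATE KEY` label or the legacy OpenSSL `Proc-Type: 4,ENCRYPTED` header, and counts the certificates in the chain file. The file names follow the `oc create secret` example; the function names are hypothetical. It also builds the JSON merge patch that points the default IngressController at the secret.

```python
import json
import re

CERT_BEGIN = "-----BEGIN CERTIFICATE-----"
LEGACY_ENCRYPTED = re.compile(r"^Proc-Type:\s*4,ENCRYPTED\s*$", re.MULTILINE)


def key_is_encrypted(pem_text):
    """True if a PEM private key is passphrase-protected."""
    # PKCS#8 encrypted keys use a dedicated block label.
    if "-----BEGIN ENCRYPTED PRIVATE KEY-----" in pem_text:
        return True
    # Legacy OpenSSL (PKCS#1) keys flag encryption in a header inside the block.
    return LEGACY_ENCRYPTED.search(pem_text) is not None


def count_certificates(pem_text):
    """Number of certificates in a PEM bundle (leaf plus any intermediates or root)."""
    return pem_text.count(CERT_BEGIN)


def check_ingress_files(cert_path, key_path):
    """Return a list of problems with the cert/key pair; an empty list means it looks usable."""
    with open(cert_path, encoding="ascii") as f:
        cert_pem = f.read()
    with open(key_path, encoding="ascii") as f:
        key_pem = f.read()
    problems = []
    if count_certificates(cert_pem) == 0:
        problems.append(f"{cert_path}: no PEM certificate found")
    if key_is_encrypted(key_pem):
        problems.append(f"{key_path}: private key is encrypted")
    return problems


def default_certificate_patch(secret_name="custom-ingress-cert"):
    """JSON merge patch that points the default IngressController at the secret."""
    return json.dumps({"spec": {"defaultCertificate": {"name": secret_name}}})
```

For example, `check_ingress_files("custom.crt", "custom.key")` should return an empty list before running `oc create secret`. The patch string is meant for `oc patch ingresscontroller.operator default --type=merge -p '<patch>' -n openshift-ingress-operator`. If the key turns out to be encrypted, `openssl pkey -in encrypted.key -out custom.key` writes an unencrypted copy after prompting for the passphrase.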

Explanation of Incorrect Answers:

B. The certificate file must have only a single certificate. → ❌ Incorrect

The certificate file can contain a certificate chain, including intermediate and root certificates, to ensure proper validation by clients.

It is not limited to a single certificate.

C. The new certificate private key must be encrypted. → ❌ Incorrect

If the private key is encrypted, OpenShift cannot automatically use it without requiring a decryption passphrase, which is not supported for automated deployments.

D. The new certificate must be a self-signed certificate. → ❌ Incorrect

While self-signed certificates can be used, they are not mandatory.

Administrators typically use certificates from trusted Certificate Authorities (CAs) to avoid browser security warnings.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Replacing the default ingress certificate in OpenShift

IBM Cloud Pak for Integration Security Configuration

OpenShift Ingress TLS Certificate Management

An administrator is installing Cloud Pak for Integration onto an OpenShift cluster that does not have access to the internet.

How do they provide their ibm-entitlement-key when mirroring images to a portable registry?

The administrator uses a cloudctl case command to configure credentials for registries which require authentication before mirroring the images.

The administrator sets the key with "export ENTITLEMENTKEY" and then uses the "cloudPakOfflineInstaller -mirror-images" script to mirror the images.

The administrator adds the entitlement-key to the properties file $HOME/.airgap/registries on the Bastion Host.

The ibm-entitlement-key is added as a docker-registry secret onto the OpenShift cluster.

When installing IBM Cloud Pak for Integration (CP4I) on an OpenShift cluster that lacks internet access, an air-gapped installation is required. This process involves mirroring container images from an IBM container registry to a portable registry that can be accessed by the disconnected OpenShift cluster.

To authenticate and mirror images, the administrator must:

Use the cloudctl case command to configure credentials, including the IBM entitlement key, before initiating the mirroring process.

Authenticate with the IBM Container Registry using the entitlement key.

Mirror the required images from IBM’s registry to a local registry that the disconnected OpenShift cluster can access.
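For reference, the credential step is usually a `cloudctl case launch` call with a `configure-creds-airgap` action. The sketch below only assembles that command line in Python (it does not run it) so that each argument is visible. The CASE archive path, inventory name, and namespace are placeholders, and the action and `--args` names are recalled from the CP4I 2021.x air-gap documentation: treat them as assumptions and verify them against the CASE version you download. `cp.icr.io` with user `cp` is the IBM Entitled Registry login that pairs with the entitlement key.

```python
import shlex


def configure_creds_command(case_archive, inventory, namespace, entitlement_key):
    """Assemble (do not run) the cloudctl call that stores entitled-registry credentials.

    The action name and --args contents are assumptions to verify against your CASE.
    """
    registry_args = f"--registry cp.icr.io --user cp --pass {entitlement_key}"
    return [
        "cloudctl", "case", "launch",
        "--case", case_archive,
        "--inventory", inventory,
        "--action", "configure-creds-airgap",
        "--namespace", namespace,
        "--args", registry_args,
    ]


if __name__ == "__main__":
    # Placeholders only; supply the real key from a secret store or environment variable.
    cmd = configure_creds_command(
        case_archive="<offline-dir>/<case-archive>.tgz",
        inventory="<setup-inventory>",
        namespace="<namespace>",
        entitlement_key="<entitlement-key>",
    )
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it; avoid printing a command that contains the real key, since it can end up in logs or shell history.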

Why Other Options Are Incorrect:

B. export ENTITLEMENTKEY and cloudPakOfflineInstaller -mirror-images

The command cloudPakOfflineInstaller -mirror-images is not a valid IBM Cloud Pak installation step.

IBM requires the use of cloudctl case commands for air-gapped installations.

C. Adding the entitlement key to .airgap/registries

There is no documented requirement to store the entitlement key in $HOME/.airgap/registries.

IBM Cloud Pak for Integration does not use this file for authentication.

D. Adding the ibm-entitlement-key as a Docker secret

While secrets are used in OpenShift for image pulling, they are not directly involved in mirroring images for air-gapped installations.

The entitlement key is required at the mirroring step, not when deploying the images.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation: Installing Cloud Pak for Integration in an Air-Gapped Environment

IBM Cloud Pak Entitlement Key and Image Mirroring

OpenShift Air-Gapped Installation Guide

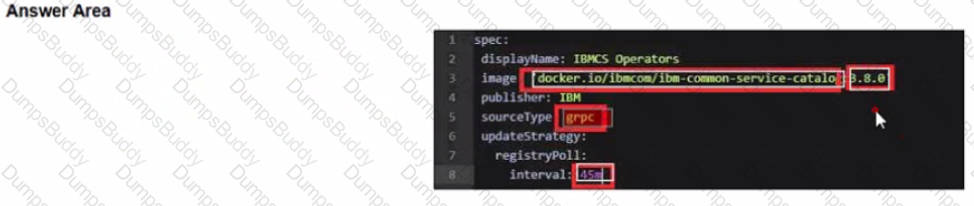

Before upgrading the Foundational Services installer version, the installer catalog source image must have the correct tag. To always use the latest catalog, where in the image below should the text 'latest' be inserted?

Upgrading from version 3.4.x and 3.5.x to version 3.6.x

Before you upgrade the foundational services installer version, make sure that the installer catalog source image has the correct tag.

If, during installation, you had set the catalog source image tag as latest, you do not need to manually change the tag.

If, during installation, you had set the catalog source image tag to a specific version, you must update the tag with the version that you want to upgrade to. Or, you can change the tag to latest to automatically complete future upgrades to the most current version.

To update the tag, complete the following actions.

To update the catalog source image tag, run the following command.

oc edit catalogsource opencloud-operators -n openshift-marketplace

Update the image tag.

Change the image tag to the specific 3.6.x version. The 3.6.3 tag is used as an example here:

spec:
  displayName: IBMCS Operators
  image: 'docker.io/ibmcom/ibm-common-service-catalog:3.6.3'
  publisher: IBM
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 45m

Change the image tag to latest to automatically upgrade to the most current version.

spec:
  displayName: IBMCS Operators
  image: 'icr.io/cpopen/ibm-common-service-catalog:latest'
  publisher: IBM
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 45m
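Switching the tag can also be scripted instead of using `oc edit`. This sketch replaces the tag on an image reference, taking care that a registry port such as `:5000` is not mistaken for a tag, and builds a JSON merge patch for the CatalogSource `spec.image` field. The helper names are hypothetical. The patch is intended for `oc patch catalogsource opencloud-operators -n openshift-marketplace --type merge -p '<patch>'`. Note that it keeps the registry and repository unchanged, so a move from `docker.io/ibmcom` to `icr.io/cpopen`, as between the two examples, must be made separately.

```python
import json


def with_tag(image, tag):
    """Return the image reference with its tag replaced (or added); digests are rejected."""
    if "@" in image:
        raise ValueError("image is pinned by digest; replace the digest instead of a tag")
    last_slash = image.rfind("/")
    last_colon = image.rfind(":")
    # A colon before the last '/' belongs to a registry port, not to a tag.
    repo = image[:last_colon] if last_colon > last_slash else image
    return f"{repo}:{tag}"


def catalog_image_patch(current_image, tag="latest"):
    """JSON merge patch that points the CatalogSource at the new tag."""
    return json.dumps({"spec": {"image": with_tag(current_image, tag)}})


if __name__ == "__main__":
    print(catalog_image_patch("icr.io/cpopen/ibm-common-service-catalog:3.6.3"))
    # {"spec": {"image": "icr.io/cpopen/ibm-common-service-catalog:latest"}}
```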

To check whether the image tag is successfully updated, run the following command:

oc get catalogsource opencloud-operators -n openshift-marketplace -o jsonpath='{.spec.image}{"\n"}{.status.connectionState.lastObservedState}'

The following sample output has the image tag and its status:

icr.io/cpopen/ibm-common-service-catalog:latest

READY

https://www.ibm.com/docs/en/cpfs?topic=online-upgrading-foundational-services-from-operator-release

What does IBM MQ provide within the Cloud Pak for Integration?

Works with a limited range of computing platforms.

A versatile messaging integration from mainframe to cluster.

Cannot be deployed across a range of different environments.

Message delivery with security-rich and auditable features.

Within IBM Cloud Pak for Integration (CP4I) v2021.2, IBM MQ is a key messaging component that ensures reliable, secure, and auditable message delivery between applications and services. It is designed to facilitate enterprise messaging by guaranteeing message delivery, supporting transactional integrity, and providing end-to-end security features.

IBM MQ within CP4I provides the following capabilities:

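Secure Messaging – Messages are protected in transit with TLS, and IBM MQ Advanced Message Security (AMS) can additionally encrypt them end to end, including while they are stored on queues.

Auditable Transactions – IBM MQ records persistent message operations in its recovery log, supporting recovery in the event of failures, and can emit event and accounting messages that support traceability and compliance.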

High Availability & Scalability – Can be deployed in containerized environments using OpenShift and Kubernetes, supporting both on-premises and cloud-based workloads.

Integration Across Multiple Environments – Works across different operating systems, cloud providers, and hybrid infrastructures.

Why the other options are incorrect:

Option A (Works with a limited range of computing platforms) – Incorrect: IBM MQ is platform-agnostic and supports multiple operating systems (Windows, Linux, z/OS) and cloud environments (AWS, Azure, Google Cloud, IBM Cloud).

Option B (A versatile messaging integration from mainframe to cluster) – Incorrect: While IBM MQ does support messaging from mainframes to distributed environments, this option does not fully highlight its primary function of secure and auditable messaging.

Option C (Cannot be deployed across a range of different environments) – Incorrect: IBM MQ is highly flexible and can be deployed on-premises, in hybrid cloud, or in fully managed cloud services like IBM MQ on Cloud.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ Overview

IBM Cloud Pak for Integration Documentation

IBM MQ Security and Compliance Features

IBM MQ Deployment Options

Given the high availability requirements for a Cloud Pak for Integration deployment, which two components require a quorum for high availability?

Multi-instance Queue Manager

API Management (API Connect)

Application Integration (App Connect)

Event Gateway Service

Automation Assets

In IBM Cloud Pak for Integration (CP4I) v2021.2, ensuring high availability (HA) requires certain components to maintain a quorum. A quorum is a mechanism where a majority of nodes or instances must agree on a state to prevent split-brain scenarios and ensure consistency.
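The majority rule in this definition is simple arithmetic: with n voting members, a quorum is ⌊n/2⌋ + 1, so the group tolerates n minus that many failures. The sketch below makes this concrete. It shows why three members are the usual minimum for quorum-based HA (two members tolerate no failure) and why a fourth member adds no fault tolerance.

```python
def quorum_size(members):
    """Smallest majority of `members` voters."""
    if members < 1:
        raise ValueError("need at least one member")
    return members // 2 + 1


def tolerated_failures(members):
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


for n in range(1, 6):
    print(n, quorum_size(n), tolerated_failures(n))
# 1 1 0
# 2 2 0
# 3 2 1
# 4 3 1
# 5 3 2
```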

Why "Multi-instance Queue Manager" (A) Requires a Quorum?

IBM MQ Multi-instance Queue Manager is designed for high availability.

It runs in an active-standby configuration that requires shared storage, and a quorum ensures that failover occurs correctly.

If the primary queue manager fails, quorum logic ensures that another instance assumes control without data corruption.

Why "API Management (API Connect)" (B) Requires a Quorum?

API Connect operates in a distributed cluster architecture where multiple components (such as the API Manager, Analytics, and Gateway) work together.

A quorum is required to ensure consistency and avoid conflicts in API configurations across multiple instances.

In high availability deployments, API Connect subsystems run three replicas spread across separate nodes, so that a majority (two of three) remains available and consistent if one node fails.

Why Not the Other Options?

C. Application Integration (App Connect) → ❌ Incorrect

While App Connect can be deployed in HA mode, it does not require a quorum. It uses Kubernetes scaling and load balancing instead.

D. Event Gateway Service → ❌ Incorrect

Event Gateway is stateless and relies on horizontal scaling rather than quorum-based HA.

E. Automation Assets → ❌ Incorrect

This component stores automation-related assets but does not require quorum for HA. It typically relies on persistent storage replication.

Thus, Multi-instance Queue Manager (IBM MQ) and API Management (API Connect) require quorum to ensure high availability in Cloud Pak for Integration.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM MQ Multi-instance Queue Manager HA

IBM API Connect High Availability and Quorum

CP4I High Availability Architecture

TESTED 18 Apr 2025

Copyright © 2014-2025 DumpsBuddy. All Rights Reserved